I spent a good chunk of 2024 focused on multi-agent systems: contributing to AutoGen, an OSS framework for building multi-agent apps, and working on a book on the topic.

A lot has happened!

This post is an attempt to catalog some of the key events into themes, and a reflection on where things might be headed. The content here is subjective (my viewpoint on what was interesting) and is based on a list of agent/multi-agent news items I curated over the last year.

TLDR: Five key observations from building and studying AI agents in 2024:

Enterprises are adopting agents, but with some caveats

Teams are building "agent-native" foundation models from the ground up

Interface automation agents dominated early commercial applications

A Shift to Complex Tasks and the Rise of Frameworks

Benchmarks reveal both progress and limitations

1. Enterprise Adoption of Agents in Products ... with Caveats

Many enterprises and startups have adopted the term “agents” in products, describing them broadly as systems that act on a user’s behalf with the goal of saving the user time and avoiding tedious/busy work. Some examples below:

Microsoft Copilot Agents : “These AI-driven agents assist users in performing a variety of tasks, working alongside you to offer suggestions, automate repetitive tasks, and provide insights to help you make informed decision”

Salesforce Agentforce : “Agentforce is a proactive, autonomous AI application that provides specialized, always-on support to employees or customers. You can equip Agentforce with any necessary business knowledge to execute tasks according to its specific role“

Sema4.ai agents. Sema4 goes a bit further than other offerings in their claims - “RPA fall short when it comes to complex, knowledge-based work. They lack the ability to reason, make judgments, and adapt to real-world changes.”

LinkedIn HR Assistant : “Starting today, recruiters can choose to delegate time-consuming tasks to Hiring Assistant including finding candidates and assisting in applicant review, so they can focus on the most strategic, people-centric parts of their job”

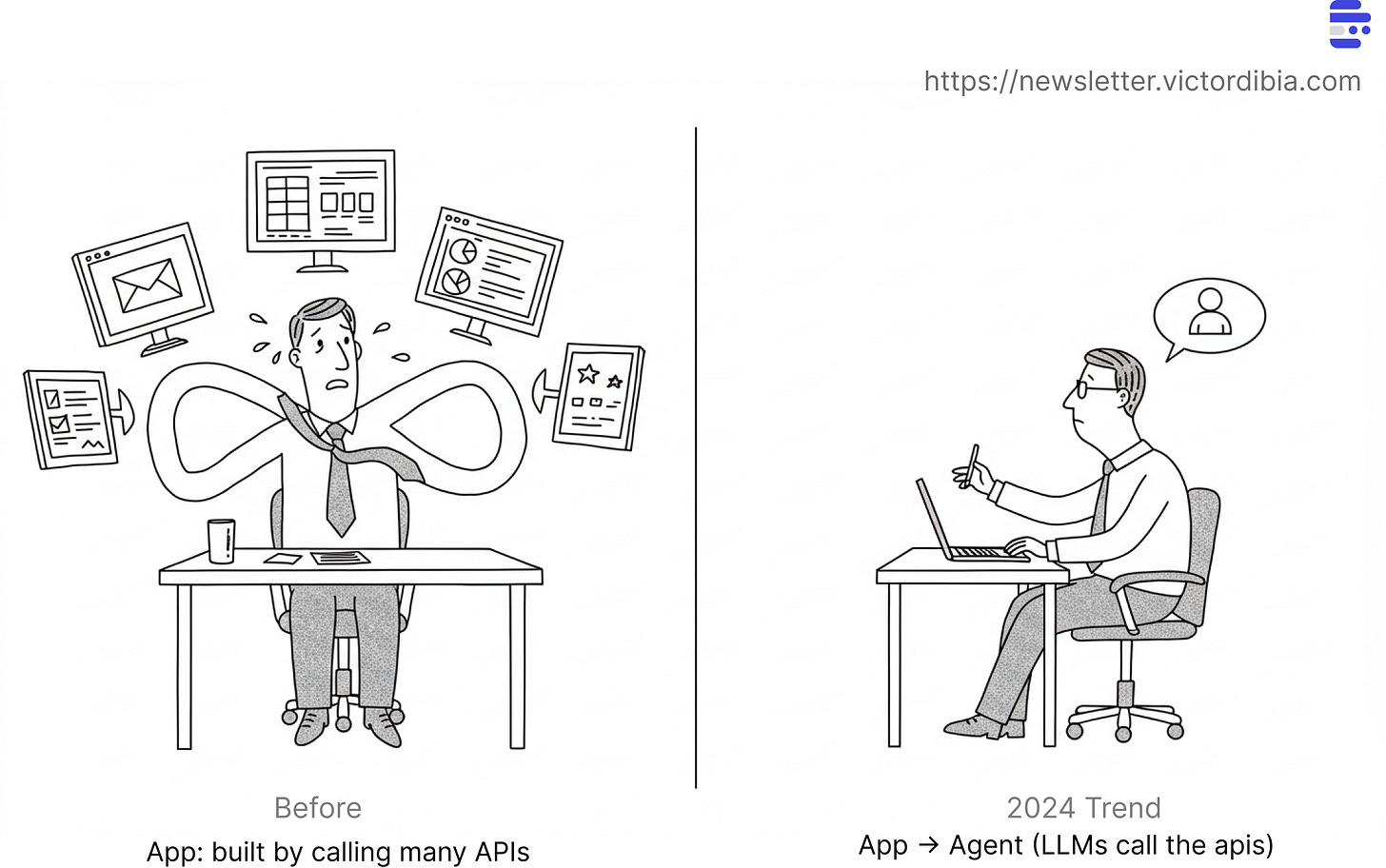

An important caveat here lies in how the agents are implemented. Most deployments use an LLM as a thin wrapper/orchestrator that "calls" existing APIs as tools. In essence, this trend represents a shift from a more manual approach ("clicking fetch data, upload to Salesforce, and generate report buttons") to a more streamlined approach ("saying generate report") while an LLM-enabled pipeline handles the clicking.

This is clearly the responsible approach - retaining the reliability of existing APIs while improving user experience by minimizing repetitive steps. It is also non-trivial, as ensuring reliable tool selection at production scale remains challenging. While this is still several steps removed from truly autonomous assistants (the holy grail of agents), it represents a first step toward that journey.

Before: Separate apps and APIs that users interact with to complete business tasks in products.

Trend: LLMs act as thin orchestration layers that translate natural language requests into multiple API calls (minimizing clicks and intermediate tasks).
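To make the "thin orchestration layer" idea concrete, here is a minimal sketch in Python. It is not any vendor's actual implementation: the three tool functions, the `TOOLS` registry, and `plan_tool_calls` are hypothetical names, and the planning step is a keyword stub standing in for what would be an LLM call with function calling.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for existing, reliable business APIs.
def fetch_data(state: dict) -> dict:
    state["rows"] = [
        {"account": "Acme", "revenue": 1200},
        {"account": "Globex", "revenue": 800},
    ]
    return state

def upload_to_crm(state: dict) -> dict:
    state["uploaded"] = len(state.get("rows", []))
    return state

def generate_report(state: dict) -> dict:
    total = sum(row["revenue"] for row in state.get("rows", []))
    state["report"] = f"{state.get('uploaded', 0)} accounts uploaded, total revenue {total}"
    return state

# Registry of tools the orchestrator is allowed to call.
TOOLS: Dict[str, Callable[[dict], dict]] = {
    "fetch_data": fetch_data,
    "upload_to_crm": upload_to_crm,
    "generate_report": generate_report,
}

def plan_tool_calls(request: str) -> List[str]:
    """Stub for the LLM: map a request to an ordered list of tool names."""
    if "report" in request.lower():
        return ["fetch_data", "upload_to_crm", "generate_report"]
    return []

def handle_request(request: str) -> dict:
    """Run the planned tools in order, passing shared state between them."""
    state: dict = {}
    for name in plan_tool_calls(request):
        if name not in TOOLS:
            # Guard against the planner selecting a tool that does not exist.
            raise ValueError(f"unknown tool: {name}")
        state = TOOLS[name](state)
    return state

print(handle_request("Generate report")["report"])
```

The existing APIs do all the real work; the only new piece is the planner, which is also where the reliability challenge (picking the right tools in the right order) lives.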

2. “Agent-Native” Foundation Models

An interesting development this year was seeing teams build foundation models specifically designed for agency. What does this mean?

Well, a good agent must do a few things well - reason through multi-step plans for tasks, act (use tools), appropriately leverage memory, and communicate with other agents.

The important trend here is that these agentic capabilities are now being “lifted” into the generative model itself.

OpenAI o1 emphasizes reasoning - planning, task decomposition etc. that previously would have been spread across multiple agent actions. Right at the end of the year (December 20), OpenAI announced (but has not yet released) the o3 family of models - with even more impressive reasoning capabilities and test-time compute!

Test-time compute allows models to dynamically allocate additional computational resources (reasoning/thinking time) depending on the complexity of the task.

The Gemini models from Google likewise make progress here with Gemini 2.0 Flash’s native user interface action-capabilities, along with other improvements like multimodal reasoning, long context understanding, complex instruction following and planning, compositional function-calling, native tool use and improved latency.

There were also advances in models that could do more things.

Multimodal output - Gemini 2.0 Flash from Google is a natively multimodal model, with multimodal input (text, images) and output (text, image and audio).

ChatGPT Advanced Voice Mode. Huge!

Movie generation: Movie Gen from Meta, a specialized movie generation model.

Lynn Cherny writes about Veo 2 - a video generation model from Google - and many other creativity tools in this post.

This shift toward "agent-native" architectures reflects a growing understanding that effective agents need more than just typical language modeling capabilities - they need built-in capabilities for planning, tool use, and coordination.

Before: LLMs focused solely on language modeling. Capabilities like planning, tool use, and task decomposition/reasoning were implemented external to the model.

Trend: Models now designed from the ground up with built-in capabilities for multi-step task decomposition, planning, tool use, and multimodal interactions.

3. Interface Agents Take Center Stage

If there was one application area that dominated commercial agent deployments in 2024, it was interface agents - agents that accomplish tasks by driving interfaces (web browsers, desktop OS etc).

Startups like Kura AI and Runner H released agent products that solve tasks by driving web browsers.

Microsoft's OmniParser improved how agents interact with GUI elements.

The new version of AutoGen (and AutoGen Studio) provides a WebSurferAgent preset that can solve tasks by driving a web browser.

ChatGPT screen sharing : “ChatGPT Advanced Voice Mode with vision can also understand what’s on a device’s screen via screen sharing”

Anthropic Claude Computer Use: “developers can direct Claude to use computers the way people do—by looking at a screen, moving a cursor, clicking buttons, and typing text.”

Project Mariner from Google “combines strong multimodal understanding and reasoning capabilities to automate tasks using your browser.”

Browser Use extension : “Make websites accessible for AI agents”

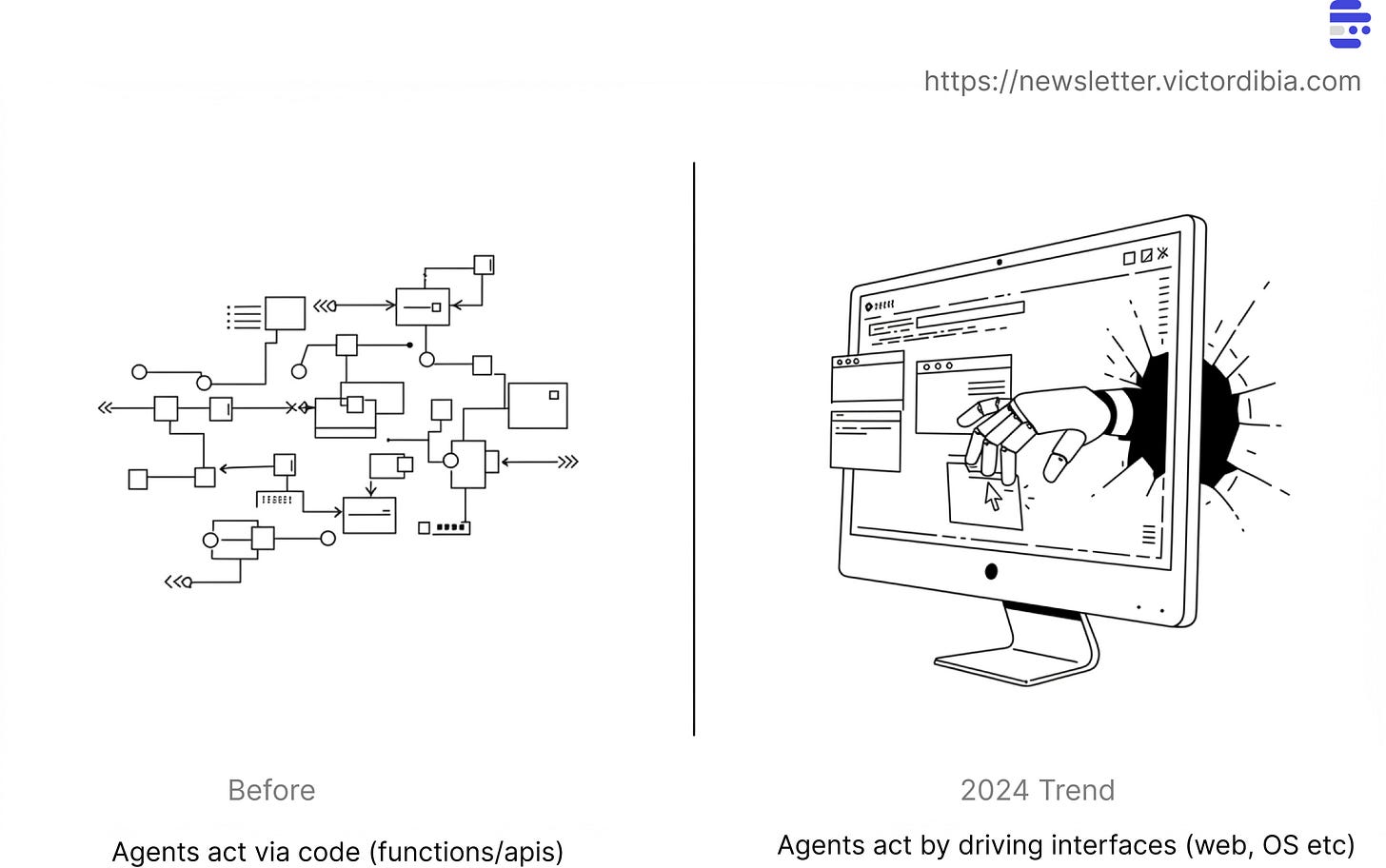

The focus on interface automation makes sense - it's where agents can deliver immediate value by automating repetitive tasks across existing disparate applications.

Before: The action space of agents typically consisted of programmatic tools and code execution.

Trend: Direct manipulation of user interfaces (web, desktop) as the primary method for agent action.

4. A Shift to Complex Tasks and the Rise of Frameworks

Multi-agent systems or even just agents are emerging as an approach to solving problems. A common source of angst this year has been around the performance of agents and hence whether they are more hype than substance.

In a LangChain survey, 41% of respondents mention performance as the primary bottleneck to using agents.

In my opinion this confusion is rooted in a poor (or perhaps evolving) understanding of “when” to use a multi-agent approach and selecting the right pattern. In many cases, you probably do not even need an agentic setup.

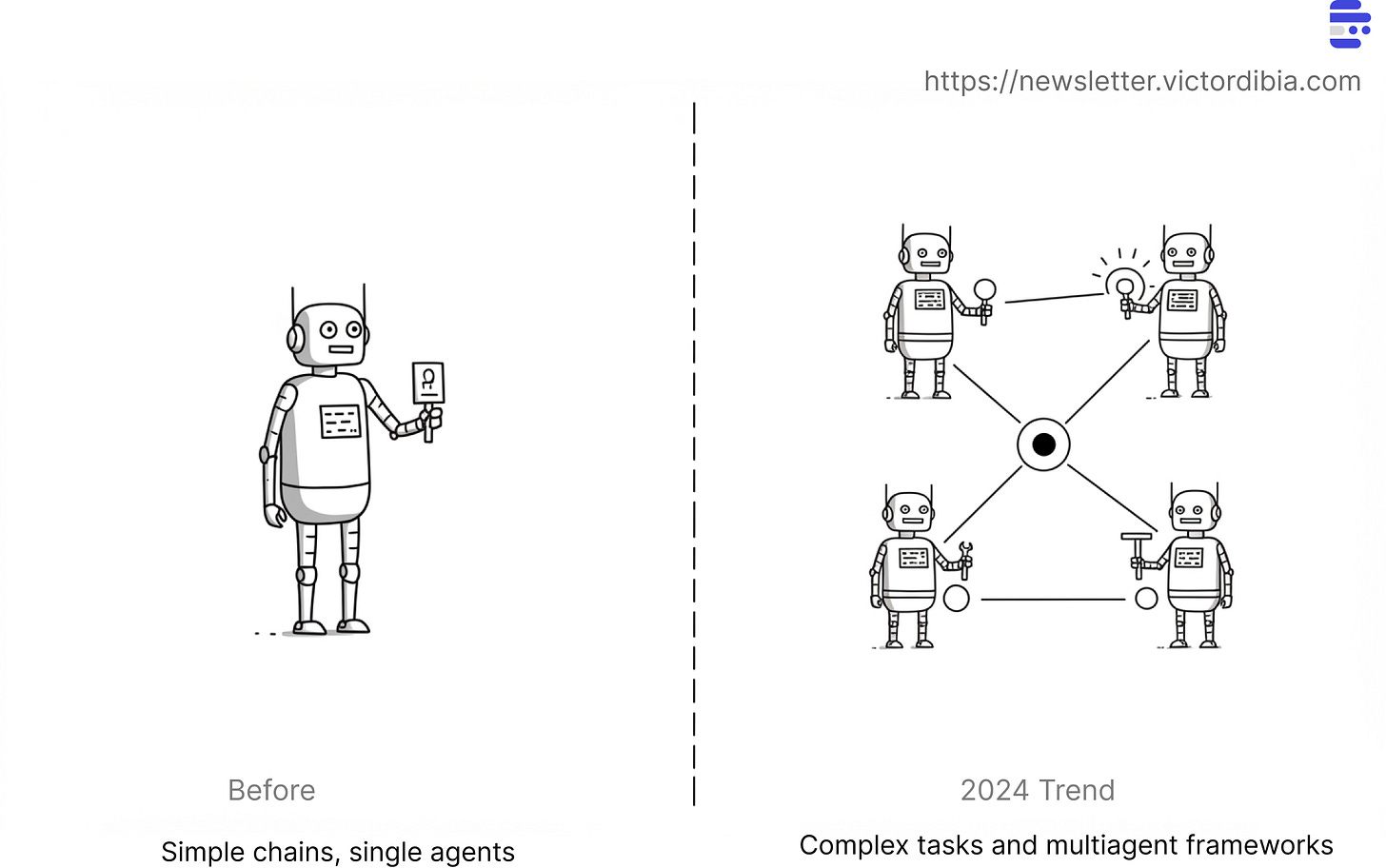

2024 also marked a transition from simple agent applications (like natural language weather queries using tools like LangChain) to more complex, autonomous use cases such as app development (Devin and Co), or even general purpose assistants (that can do any digital task).

A critical challenge remains: selecting appropriate patterns for these complex tasks - specifically, how to implement behaviors including branching logic, reflection, metacognition etc. effectively. The importance of these patterns warrants deeper exploration in future discussions (I’ll write a separate post!).

To address these challenges, several AI frameworks, guides, and research papers emerged throughout the year, including:

AutoGen: an open-source framework for building AI agent systems

Magentic One: A high-performing generalist agentic system designed to solve complex tasks built with AutoGen. Uses a multi-agent architecture where a lead agent, the Orchestrator, directs four other agents to solve tasks. The Orchestrator plans, tracks progress, and re-plans to recover from errors, while directing specialized agents to perform tasks like operating a web browser, navigating local files, or writing and executing Python code.

AutoGen Studio: a no-code tool for prototyping, testing and debugging multi-agent apps.

LangGraph : “Gain control with LangGraph to design agents that reliably handle complex tasks”

OpenAI Swarm (experimental) : Educational framework exploring ergonomic, lightweight multi-agent orchestration

CrewAI

Pydantic AI - a Python agent framework designed to make it less painful to build production grade applications with Generative AI.
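The plan, track progress, and re-plan loop described for the Magentic One Orchestrator above can be sketched in a few lines of plain Python. This is an illustrative toy under stated assumptions, not Magentic One's or AutoGen's actual code or API: the `Orchestrator` class, its `plan` stub, and the three worker functions are all hypothetical, and a real system would use an LLM to produce and revise the plan.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Worker = Callable[[str], str]  # a worker takes an instruction and returns a result

@dataclass
class Orchestrator:
    workers: Dict[str, Worker]
    max_replans: int = 2
    ledger: List[str] = field(default_factory=list)  # progress log

    def plan(self, task: str, attempt: int) -> List[Tuple[str, str]]:
        """Stub planner: returns (worker name, instruction) steps.
        Hypothetical rule: try the web first, fall back to local files on re-plan."""
        source = "web" if attempt == 0 else "files"
        return [(source, f"find data for: {task}"), ("coder", "summarize the data")]

    def run(self, task: str) -> str:
        for attempt in range(self.max_replans + 1):
            result = ""
            try:
                for worker_name, instruction in self.plan(task, attempt):
                    result = self.workers[worker_name](instruction)
                    self.ledger.append(f"ok: {worker_name}")
                return result
            except RuntimeError as err:
                # Record the failure, then loop around to build a new plan.
                self.ledger.append(f"failed: {err}; re-planning")
        raise RuntimeError("task failed after re-planning")

# Hypothetical specialized workers; the web worker always fails to force a re-plan.
def web_surfer(instruction: str) -> str:
    raise RuntimeError("page timed out")

def file_surfer(instruction: str) -> str:
    return "local data"

def coder(instruction: str) -> str:
    return "summary written"

orchestrator = Orchestrator(workers={"web": web_surfer, "files": file_surfer, "coder": coder})
print(orchestrator.run("quarterly sales"))
print(orchestrator.ledger)
```

Even in this toy, the interesting design questions show up quickly: what goes in the ledger, when to give up, and how much of the previous plan to keep when re-planning.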

This year, a core focus in AutoGen will be to make it easier to express various multi-agent patterns and provide the building blocks for this.

Before: Simple chains and tool-calling sequence patterns applied to simple tasks.

Trend: Sophisticated patterns for handling complex, multi-step tasks requiring planning, reflection, and coordination.

Which brings us to the last trend - evaluation.

5. End-to-End Agent Benchmarks

Finally, benchmarks introduced in 2024 taught us a bit about the types of tasks we can expect autonomous multi-agent systems to tackle and how well they perform. The CORE-Bench framework for computational reproducibility, WebArena's focus on web-based tasks, and Microsoft's Windows Agent Arena all pushed the field to be more rigorous about measuring agent behaviors on tasks.

These benchmarks revealed both progress and limitations. While specialized agents showed impressive capabilities in narrow domains, general-purpose agents still struggled with complex, open-ended tasks - achieving only 14.41% success rates on end-to-end tasks in WebArena compared to 78.24% for humans.

Notably, towards the end of the year, we saw step function increases in performance on some benchmarks, which tells us a bit about what might be coming in 2025. For example, the newly announced (but not released) o3 model from OpenAI scores 87.5% on the ARC-AGI benchmark, where the human threshold is 85%.

Despite all their flaws (benchmarks are frequently not indicative of real world performance on your business problem), I am of the opinion benchmarks are still a good canary in the coal mine on the arrival of true AGI/ASI.

Before: Ad-hoc evaluation focused on individual model capabilities (language, reasoning, tool use)

Trend: Comprehensive task completion benchmarks measuring end-to-end agent performance.

Looking Ahead - What will Happen in 2025?

Predictions of the future are tricky - no one really knows what will happen. However, current trends and my experience over the last year suggest the following 3 things.

1. More improvements in models.

I expect a continuation of the trend of more capabilities being "lifted" into the model. For example, it is likely we'll see models that natively excel at adaptation/personalization - using memory efficiently by making smart decisions about what information to store, when to store it, and how and when to retrieve it effectively.

Reinforcement learning on multi-agent trajectories to improve memory and external tool usage.

Reinforcement learning to improve multi-agent orchestration

2. Patterns Drive Reliability.

If 2024 was the year agents emerged as a viable approach to problem-solving, 2025 will be the year they become the de facto best-performing (ideally reliable) solution for some specific problem domains, much like how Convolutional Neural Networks (and their derivatives) became the standard for vision-related tasks.

I believe that convergence on a set of multi-agent system development patterns will get us there.

The evolution towards patterns will likely unfold in phases as we identify what works and what doesn't across different complexity levels. Phase 1 will focus on foundational capabilities - simple, focused tasks like visualization generation and file conversion that serve as building blocks for more complex applications. Phase 2 will tackle greater complexity, enabling advanced applications like comprehensive data analysis and company research, along with sophisticated tasks such as automated food ordering and flight booking. Phase 3 will focus on integration, introducing general-purpose assistants capable of handling all previous tasks reliably. These will be systems like the one Sam Altman alludes to and successors to early systems like Magentic One, incorporating lessons learned from earlier phases. As we optimize across the technology stack, successful patterns will emerge. These patterns will be standardized and incorporated into libraries and frameworks (like AutoGen), providing clear guidance on when to use specific approaches.

3. Agent Marketplaces (Declarative Agents)

We'll see the rise of reusable agents and agent marketplaces, particularly for common problems e.g., research tasks, content generation, app development etc. This ecosystem will likely include both commercial products from startups and open-source implementations. While this may create a challenging environment for startups, it should foster healthy competition and innovation.

We're already working toward this future at AutoGen, developing a gallery of declarative multi-agent teams that make this vision possible today.

Should You Invest in Agents in 2025?

Should you or your business invest in agents?

Well, there is indeed a class of problems for which agents or multi-agent systems are a good fit. I call these complex tasks and write about 5 key characteristics of those types of problems in my upcoming book. Be clear on whether your task indeed exhibits these properties.

After that, select the right tool for the job. How do you know the right tool?

Well, be disciplined in designing a careful evaluation harness. Start by building a simple baseline (which could be rules-based), then iteratively increase complexity - perhaps a simple agent pipeline. From there, explore frameworks to prototype more complex patterns as needed.

Throughout this process, choose solutions that meet your performance and cost goals, guided by your evaluation results. Remember that agents are just one of MANY tools in a developer's arsenal. The best tool is simply the one that solves your problem effectively.
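As a rough sketch of what that process can look like, the snippet below scores a rules-based baseline and a more complex candidate on the same small evaluation set, then picks the cheapest option that meets a target success rate. Everything here is an assumption for illustration: the toy unit-conversion tasks, the `Candidate` class, the cost figures, and `agent_pipeline`, which is a deterministic stand-in rather than a real agent.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Candidate:
    name: str
    solve: Callable[[str], str]
    cost_per_task: float  # assumed cost in dollars, illustrative only

# Tiny hypothetical evaluation set of (task, expected answer) pairs.
EVAL_SET: List[Tuple[str, str]] = [
    ("convert 3 km to m", "3000"),
    ("convert 2 km to m", "2000"),
    ("how many metres is half a km", "500"),
    ("convert 1.5 km to m", "1500"),
]

def rules_baseline(task: str) -> str:
    """Rules-based baseline: only handles 'convert N km to m' with an integer N."""
    match = re.fullmatch(r"convert (\d+) km to m", task)
    return str(int(match.group(1)) * 1000) if match else "unknown"

def agent_pipeline(task: str) -> str:
    """Stand-in for a more flexible (and more expensive) agent pipeline."""
    match = re.search(r"(\d+(?:\.\d+)?)\s*km", task)
    if match:
        km = float(match.group(1))
    elif "half a km" in task:
        km = 0.5
    else:
        return "unknown"
    return str(int(km * 1000))

def success_rate(candidate: Candidate) -> float:
    """Fraction of evaluation tasks the candidate answers exactly right."""
    hits = sum(candidate.solve(task) == expected for task, expected in EVAL_SET)
    return hits / len(EVAL_SET)

def pick_cheapest(candidates: List[Candidate], target: float) -> Optional[Candidate]:
    """Return the cheapest candidate whose success rate meets the target, if any."""
    good_enough = [c for c in candidates if success_rate(c) >= target]
    return min(good_enough, key=lambda c: c.cost_per_task) if good_enough else None

candidates = [
    Candidate("rules baseline", rules_baseline, cost_per_task=0.0),
    Candidate("agent pipeline", agent_pipeline, cost_per_task=0.02),
]
for c in candidates:
    print(f"{c.name}: success={success_rate(c):.2f}, cost=${c.cost_per_task:.2f}")
choice = pick_cheapest(candidates, target=0.9)
print("chosen:", choice.name if choice else "none")
```

If the target were lower (say 0.5), the same harness would pick the free rules baseline instead, which is the point: let the evaluation results, not the hype, decide how much complexity you need.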

And that's it! - Stay Updated

How do you stay in touch or updated? I'll keep sharing what I learn through this newsletter and the upcoming book on multi-agent systems. Consider subscribing to the newsletter or preordering the book (if you like long form content and are happy to wait).

Other awesome Year in Review AI/Agent posts to consider reading:

Noteworthy research papers of 2024 by Sebastian Raschka, PhD

Did OpenAI Just Solve Abstract Reasoning? by Melanie Mitchell