Interface Agents - Building Multi-Agent Applications that Act via Controlling Interfaces (Browsers, Apps)

Issue #20 | LLM-Enabled agents that act by driving interfaces (e.g., fill in and submit a form, conduct search). Current approaches, challenges and use cases.

Agentic applications can assist users by acting on their behalf. The tools available to an agent significantly affect the types and complexity of tasks it can accomplish. These tools may be specific (e.g., an API call that returns the weather for a given location) or general (e.g., a code executor that can implement any action represented as code). The code-executor approach is followed in tools like AutoGen [1], and some LLMs are even fine-tuned to generate code and use APIs [2]. However, some tasks are set in environments that are not easily driven by code, such as interfaces designed for human interaction (e.g., searching multiple websites or local apps to find the best flight tickets). To overcome this, an emerging pattern is to create agents that can plan and directly act on interfaces (e.g., clicking a button, typing text, scrolling) to complete tasks.

In this post, we will discuss these types of interface agents and cover the following:

Why Interface Agents?

Components of Interface Agents (Interface Representation, Action Sequence Planner, Action Executor)

Emerging Tools (startups like Adept and MultiOn; open source tools like AutoGen, Open Interpreter, etc.)

Open Challenges (Interface representation, evaluation, etc.)

Use Cases (Form filling, data extraction, etc.)

In the example above, an AI agent (Adept AI) is tasked with processing an invoice attached to an email. This involves opening the attached invoices, extracting essential information such as invoice numbers and total costs, and subsequently entering this data into the company's accounts payable software for accurate record-keeping.

Edit: Since this post was written, there have been a few announcements in this area worth keeping up with:

October 2024: Anthropic Computer Use

Jan 2025: OpenAI Operator

Why Drive Interfaces?

Why do we need agents that can understand and act on interfaces? To provide context, the primary goal of agents is to assist users in completing tasks. Here are some examples of tasks phrased as natural language requests:

"Send an email to Victor tomorrow morning to inform him that I will call him."

"Add Contoso Inc to the TODO list on GitHub issue #234 in the Contoso Rocks repository."

"Find me an apartment on Redfin (a real estate listing website) that was built within the last 15 years and has not experienced more than a 20% price increase in the past five years."

"Create a P&L column in my Excel or Google Sheets report and insert the appropriate formula."

Some of these tasks exist in environments where the required actions have no programmatic API. For instance, Redfin may not offer an API, or Excel may lack an API for creating finely-tuned columns. Even when an API exists, these interfaces were fundamentally designed for human interaction and intuition (how a person perceives the information, how it is organized, etc.) in ways that an API cannot fully capture or replicate. Moreover, depending on the user experience design, some tasks require numerous clicks, which becomes tedious at high volume. An agent might not be faster, but it can take on tasks that humans prefer not to do.

In such situations, having an agent that can drive interfaces is beneficial.

This concept is a powerful metaphor: anything on a computer is an interface.

Summary: Interface agents are best suited to tasks that cannot be represented as code or API calls, that take place in interfaces designed for human interaction, or that are tedious.

Components of Interface Agents

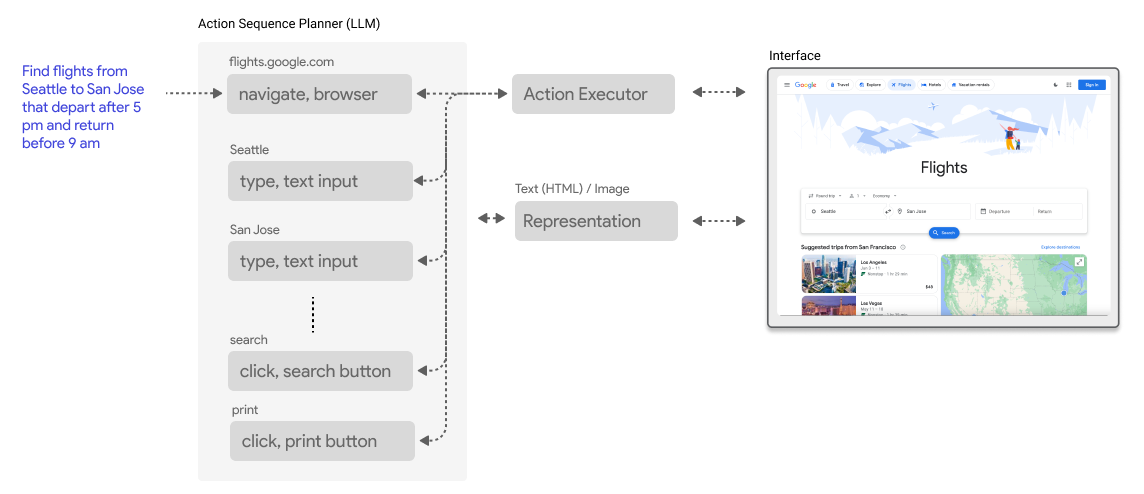

Agents can drive interfaces to address tasks through action sequences: steps, each pairing an action with a target (e.g., [click, button 1]), that are executed on the interface to achieve a specific goal.
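To make the [action, target] notation concrete, here is a minimal Python sketch of how a single step might be represented and validated. The names `ActionStep`, `parse_step`, and `ALLOWED_ACTIONS` are hypothetical and exist only for illustration; the action vocabulary is simply drawn from the actions mentioned in this post, not from any particular tool.

```python
from dataclasses import dataclass

# Hypothetical action vocabulary, taken from the examples in this post.
ALLOWED_ACTIONS = {"navigate", "click", "type", "scroll"}


@dataclass(frozen=True)
class ActionStep:
    """One step in an action sequence: what to do and what to do it on."""
    action: str
    target: str


def parse_step(text: str) -> ActionStep:
    """Parse the '[action, target]' notation into an ActionStep."""
    inner = text.strip()
    if not (inner.startswith("[") and inner.endswith("]")):
        raise ValueError(f"expected '[action, target]', got {text!r}")
    # Split on the first comma only, so targets may contain spaces.
    action, sep, target = inner[1:-1].partition(",")
    action, target = action.strip().lower(), target.strip()
    if not sep or not target:
        raise ValueError(f"missing target in {text!r}")
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action {action!r}")
    return ActionStep(action, target)


step = parse_step("[click, button 1]")
print(step)
```

Restricting steps to a small, explicit vocabulary is one possible design choice: it lets an executor reject malformed or unsupported steps before anything touches the interface.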

For example, to find "return flights from Seattle to San Jose that depart after 5 pm and return before 9 am", an action sequence might involve the following steps executed mainly in a browser:

1. Navigate to a flight booking website, such as flights.google.com. [navigate, browser]

2. Input "Seattle" in the departure field. [type, input]

3. Input "San Jose" in the arrival field. [type, input]

4. Select the desired dates of travel. [click, dropdown]

5. Set the departure flight filter for departures after 5 pm. [click, dropdown]

6. Set the return flight filter for arrivals before 9 am. [click, dropdown]

7. Click the search button to find the flights that meet the criteria. [click, button]

8. Print results. [click, button]

While the process may appear straightforward, deriving a list of valid steps can be quite complex. For instance, there may be a "leaving" field instead of "departures," and the interface to select dates might require navigating through three or four submenus. Additionally, there might be a "find" button instead of a "search" button, or even multiple "search" buttons requiring disambiguation. An effective agent should be agnostic to these variations, disambiguate as needed, and adapt or plan in situ to address the task. See the example below of a browser agent from MultiOn.

To accomplish this, the agent requires a representation of the interface, an action sequence or plan comprising steps ([action, target]), as well as an executor that implements the actions on the specified interface targets.
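The three pieces named above can be sketched as a toy loop. This is an illustrative Python sketch under stated assumptions, not how any particular product works: `MockInterface`, `Element`, `plan`, and `execute` are hypothetical names, the "interface" is an in-memory stand-in for a real browser or app, and the planner is a hard-coded rule where a real system would typically call an LLM.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Simplified description of one interface element."""
    name: str
    kind: str  # e.g. "input", "button", "dropdown"
    value: str = ""


@dataclass
class MockInterface:
    """In-memory stand-in for a real interface (assumption for illustration)."""
    elements: dict[str, Element] = field(default_factory=dict)
    log: list[str] = field(default_factory=list)

    def represent(self) -> list[str]:
        """Interface representation: a text summary a planner could consume."""
        return [f"{e.kind}:{e.name}" for e in self.elements.values()]


def plan(task: dict[str, str], representation: list[str]) -> list[tuple[str, str, str]]:
    """Toy planner: emit (action, target, argument) steps for fields that exist."""
    available = {item.split(":", 1)[1] for item in representation}
    steps = [("type", name, text) for name, text in task.items() if name in available]
    if "search" in available:
        steps.append(("click", "search", ""))
    return steps


def execute(ui: MockInterface, steps: list[tuple[str, str, str]]) -> None:
    """Executor: apply each step to its target element and record it."""
    for action, target, arg in steps:
        element = ui.elements[target]
        if action == "type":
            element.value = arg
        elif action != "click":
            raise ValueError(f"unsupported action {action!r}")
        ui.log.append(f"{action} {target} {arg}".strip())


ui = MockInterface({
    "departure": Element("departure", "input"),
    "arrival": Element("arrival", "input"),
    "search": Element("search", "button"),
})
steps = plan({"departure": "Seattle", "arrival": "San Jose"}, ui.represent())
execute(ui, steps)
print(ui.log)
```

Note that the planner only sees the representation, never the interface itself. That separation is why the quality of the representation matters so much: a field labelled "leaving" instead of "departure" would be silently skipped by this toy planner.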