If you've worked with AI agents, you probably know that your agent is only as good as the tools it can use. An agent with email and GitHub access can triage messages, extract attachments, respond to issues, and more, far outperforming one that can only answer questions with responses generated by an LLM.

In practice, tools are often challenging to build, test and integrate correctly.

Model Context Protocol (MCP) was released by Anthropic in November 2024 as a unified protocol for exactly this purpose. While it began as a way to provide context to LLMs (think RAG use cases where answers live in your custom database), its recent popularity is, in my opinion, due to how easily it enables tool integration. There are lots of guides on integrating MCP with apps like Claude Desktop or IDEs like Cursor and Windsurf, but there seems to be no guidance on how to “supercharge” your own AI agents with MCP.

Hint: You can do this in 2 lines of code with AutoGen.

In this post, we'll look at how to implement an agent with AutoGen and MCP tools.

What will you learn?

What an MCP Server is and how to set one up (or connect to an existing one)

How to seamlessly connect MCP to your agent

Important: The practical limitations and implementation challenges of MCP

What is the Model Context Protocol?

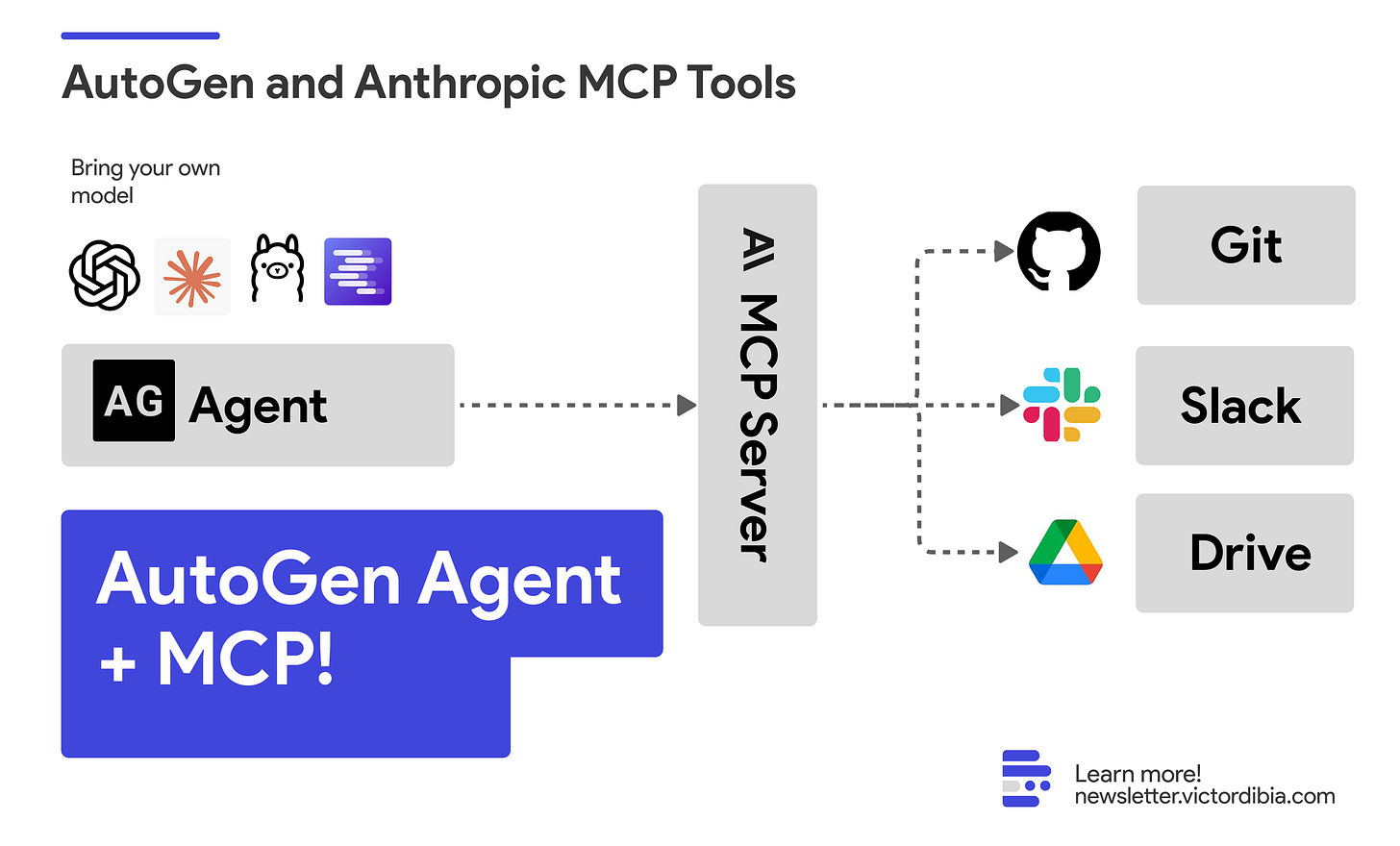

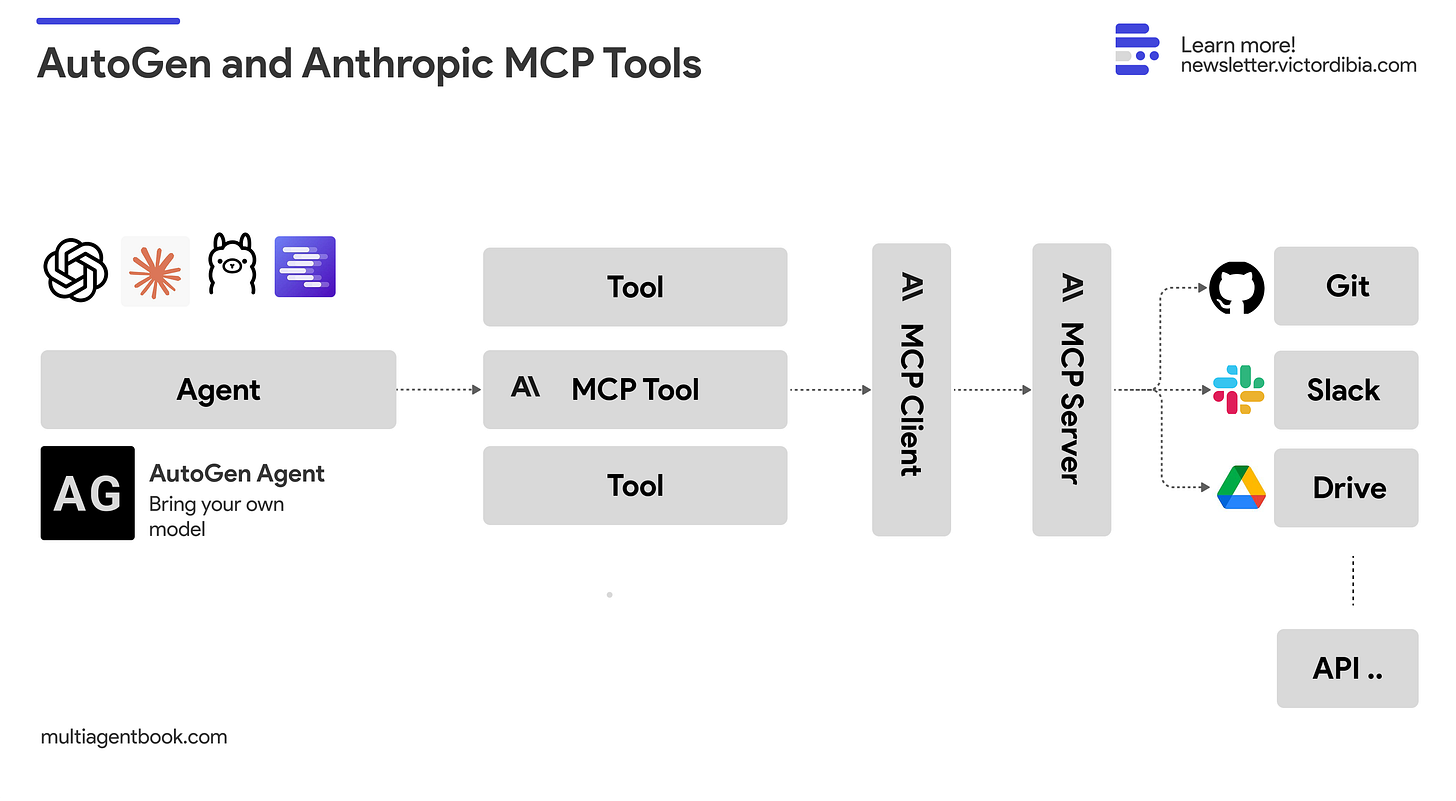

The Model Context Protocol is a client-server architecture that connects LLMs to external tools and data sources. As illustrated in the diagram, MCP creates a standardized interface between your AutoGen agent (the host) and various services like Git, Slack, Drive, and APIs.

MCP's architecture consists of:

MCP Hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data through MCP. In this example, your AutoGen agent acts as a host that wants to access external capabilities.

MCP Clients: Protocol clients that maintain 1:1 connections with servers

MCP Servers: Dedicated interfaces that expose tools and data to your agent

External Services: Git, Slack, Drive, APIs, and other systems your agent needs to interact with

The key advantage is offloading tool implementation to specialized servers. Instead of building direct integrations into your agent, you connect to existing MCP servers that handle the complex interactions.

MCP servers communicate with clients using JSON-RPC 2.0, with two main transport mechanisms:

Stdio transport: Uses standard input/output streams for local integrations and command-line tools

SSE (Server-Sent Events) transport: Enables server-to-client streaming, with HTTP POST requests used for client-to-server communication

Each server defines a set of capabilities, including tools, which are executable functions with defined input schemas. The client discovers these tools with the `tools/list` method and invokes them with `tools/call`.
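To make this exchange concrete, here is a minimal standard-library sketch of the two JSON-RPC 2.0 requests a client sends. The method names and the `name`/`arguments` params follow the MCP specification; the `fetch` tool, the URL, and the helper names are illustrative assumptions. A real session must also complete MCP's `initialize` handshake first, which this sketch omits.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 matches each response to its request by "id", so ids must be unique.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def encode_for_stdio(message: dict) -> bytes:
    """Serialize a message as a single line of JSON followed by a newline."""
    # json.dumps escapes any newlines inside strings, so the payload stays on one line.
    return (json.dumps(message) + "\n").encode("utf-8")


# 1. Discover the tools a server exposes.
list_request = jsonrpc_request("tools/list")
# 2. Invoke one by name, with arguments that match its input schema.
call_request = jsonrpc_request(
    "tools/call",
    {"name": "fetch", "arguments": {"url": "https://example.com"}},
)

print(encode_for_stdio(list_request))
print(encode_for_stdio(call_request))
```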

For example, a server might expose tools like fetch to retrieve web content, analyze_csv for data processing, or github_create_issue for API integrations.
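As a rough illustration, a `tools/list` result entry for a tool like `analyze_csv` might look like the dictionary below. Per the MCP specification each tool carries a `name`, a `description`, and a JSON Schema under `inputSchema`; the specific schema shown here is a hypothetical example, and `missing_required` is a hypothetical helper that performs only a shallow check a client could run before calling `tools/call`.

```python
# A hypothetical entry from a tools/list result.
analyze_csv_tool = {
    "name": "analyze_csv",
    "description": "Compute summary statistics for a CSV file.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Path to the CSV file"},
            "columns": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["path"],
    },
}


def missing_required(tool: dict, arguments: dict) -> list[str]:
    """Return required parameters absent from `arguments` (no type checking)."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(missing_required(analyze_csv_tool, {"columns": ["price"]}))  # ['path']
print(missing_required(analyze_csv_tool, {"path": "sales.csv"}))  # []
```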

Creating an AutoGen Agent with Tools

Before diving into MCP integration, let's understand how traditional tool integration works with AutoGen. If you're not familiar with AutoGen basics, this serves as a quick refresher.

```python
from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.ui import Console
from autogen_ext.models.openai import OpenAIChatCompletionClient

model_client = OpenAIChatCompletionClient(model="gpt-4o")


async def get_weather(city: str) -> str:
    """Get the weather for a given city."""
    return f"The weather in {city} is 73 degrees and Sunny."


# Define an AssistantAgent with the model, tool, system message, and reflection enabled.
# The system message instructs the agent via natural language.
agent = AssistantAgent(
    name="weather_agent",
    model_client=model_client,
    tools=[get_weather],
    system_message="You are a helpful assistant.",
    reflect_on_tool_use=True,
    model_client_stream=True,  # Enable streaming tokens from the model client.
)

# Run the agent and stream the messages to the console.
# Top-level await works in a Jupyter notebook; in a script, wrap this call in asyncio.run().
await Console(agent.run_stream(task="What is the weather in New York?"))
```

This example illustrates AutoGen's straightforward approach to tool integration:

Define a function (`get_weather`) that performs a specific task.

Pass it to the agent via the `tools` parameter.

The agent can now invoke this function when needed.

AutoGen provides a FunctionTool class which inherits from the BaseTool interface. Python functions are automatically converted to a FunctionTool, which extracts the tool schema for use with an LLM. FunctionTool offers an interface for executing Python functions either asynchronously or synchronously. Each function must include type annotations for all parameters and its return type. These annotations enable FunctionTool to generate a schema necessary for input validation, serialization, and for informing the LLM about expected parameters. When the LLM prepares a function call, it leverages this schema to generate arguments that align with the function's specifications.
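To illustrate the idea (not AutoGen's actual implementation, which differs), here is a simplified standard-library sketch of how type annotations and a docstring can be turned into a tool schema of the kind an LLM sees. `function_schema` is a hypothetical helper, and it only handles a few primitive types.

```python
import inspect
from typing import get_type_hints

# Simplified mapping from Python annotations to JSON Schema types.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_schema(func) -> dict:
    """Build a tool schema (name, description, parameters) from a function's signature."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # A missing or unsupported annotation raises KeyError here, a crude
        # stand-in for why FunctionTool requires annotations on every parameter.
        properties[name] = {"type": _JSON_TYPES[hints[name]]}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


async def get_weather(city: str) -> str:
    """Get the weather for a given city."""
    return f"The weather in {city} is 73 degrees and Sunny."


print(function_schema(get_weather))
```

The LLM never sees the Python function itself, only a schema like this, which is why accurate annotations and docstrings matter.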

Adding MCP Tools to An AutoGen Agent

Integrating MCP tools with AutoGen is straightforward. The key component is the `mcp_server_tools` function, which bridges your agent with MCP servers. Under the hood, `mcp_server_tools` wraps each MCP tool in an `McpToolAdapter`, which inherits from AutoGen's `BaseTool` class. This adapter leverages AutoGen's existing infrastructure for creating annotations and tool schemas, making the MCP tools seamlessly available to your agent.
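The adapter idea can be sketched in a few lines. The class below is hypothetical and much simpler than AutoGen's `McpToolAdapter`: it reuses the server-provided input schema as the tool's parameter schema and forwards each call to a `call_tool` coroutine. A fake `call_tool` stands in for a real MCP client session so the sketch runs offline.

```python
import asyncio
from typing import Any, Awaitable, Callable

# Signature of a coroutine that forwards a call to an MCP server.
CallTool = Callable[[str, dict], Awaitable[Any]]


class RemoteToolAdapter:
    """Hypothetical adapter exposing a remote MCP tool as a local tool."""

    def __init__(self, definition: dict, call_tool: CallTool) -> None:
        self.name = definition["name"]
        self.description = definition.get("description", "")
        # Reuse the server-provided JSON Schema as the tool's parameter schema.
        self.schema = {
            "name": self.name,
            "description": self.description,
            "parameters": definition["inputSchema"],
        }
        self._call_tool = call_tool

    async def run(self, arguments: dict) -> Any:
        # The agent calls this like any local tool; the work happens on the server.
        return await self._call_tool(self.name, arguments)


async def fake_call_tool(name: str, arguments: dict) -> str:
    """Stand-in for a real MCP session's tool call."""
    return f"{name} called with {arguments}"


fetch_definition = {
    "name": "fetch",
    "description": "Fetch a URL.",
    "inputSchema": {"type": "object", "properties": {"url": {"type": "string"}}, "required": ["url"]},
}
adapter = RemoteToolAdapter(fetch_definition, fake_call_tool)
print(asyncio.run(adapter.run({"url": "https://example.com"})))
```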

In the example below, we'll use the MCP fetch server provided by the Anthropic team, a Model Context Protocol server that provides web content fetching capabilities.

```python
import asyncio

from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.ui import Console
from autogen_core import CancellationToken
from autogen_ext.models.openai import OpenAIChatCompletionClient
from autogen_ext.tools.mcp import StdioServerParams, mcp_server_tools


async def main() -> None:
    # Set up server params for the fetch MCP server, launched locally via uvx.
    fetch_mcp_server = StdioServerParams(command="uvx", args=["mcp-server-fetch"], read_timeout_seconds=100)
    tools = await mcp_server_tools(fetch_mcp_server)

    # Create an agent that can use the fetch tool.
    model_client = OpenAIChatCompletionClient(model="gpt-4o")
    agent = AssistantAgent(name="fetcher", model_client=model_client, tools=tools, reflect_on_tool_use=True)

    await Console(
        agent.run_stream(
            task="Summarize the content of https://newsletter.victordibia.com/p/you-have-ai-fatigue-thats-why-you",
            cancellation_token=CancellationToken(),
        )
    )


# In a Jupyter notebook, await main() directly; in a script, use asyncio.run(main()).
await main()
```

And the result:

```text
---------- user ----------
Summarize the content of https://newsletter.victordibia.com/p/you-have-ai-fatigue-thats-why-you
---------- fetcher ----------
[FunctionCall(id='call_ssi0W4x0cTmpd02Ii2c4cP28', arguments='{"url":"https://newsletter.victordibia.com/p/you-have-ai-fatigue-thats-why-you"}', name='fetch')]
---------- fetcher ----------
[FunctionExecutionResult(content='[TextContent(type=\'text\', text=\'... If we focus on AI related sub categories (Computer Vision, Machine Learning, NLP, Robotics, AI, and Information Retrieval), there were:\\n\\n\\n\\n<error>Content truncated. Call the fetch tool with a start_index of 5000 to get more content.</error>\', annotations=None)]', name='fetch', call_id='call_ssi0W4x0cTmpd02Ii2c4cP28', is_error=False)]
---------- fetcher ----------
The article "You Have AI Fatigue – That's Why You" by Victor Dibia discusses the concept of "AI Fatigue," a phenomenon where individuals and organizations feel exhausted due to the relentless pace of AI advancements. The author shares a personal story of forgetting his password, symbolizing cognitive overload. The rapid development in AI, fueled by high numbers of research papers and constant paradigm shifts, has become overwhelming for many in the field. This period began with the release of ChatGPT around November 2022 and has continued with little sign of slowing down. It highlights the mental, emotional, and operational toll of keeping up with AI advancements.
```

Under the hood, `mcp_server_tools` does several important things:

It spins up an MCP client session based on the server parameters (in this case, `uvx mcp-server-fetch`): it executes the server command and instantiates a client session.

It runs `list_tools` to get the schema of the available tools.

It wraps each MCP tool, extracting its parameters so it can be used as a standard tool callable by any LLM.

It passes these tools to the agent, making them available for use.

In this example, we're using Stdio transport (one of MCP's supported transport mechanisms) which enables communication through standard input/output streams—ideal for local integrations and command-line tools.
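To see what the stdio transport amounts to, here is a self-contained, standard-library sketch: the client launches a child process and exchanges newline-delimited JSON-RPC messages over its stdin and stdout. The toy server and its `echo` tool are hypothetical; a real MCP server, like the fetch server above, also expects the `initialize` handshake before `tools/list`, which this sketch skips.

```python
import json
import subprocess
import sys
import textwrap

# A toy stdio "server" (not a real MCP server): it reads one JSON-RPC request
# per line from stdin and answers tools/list with a single "echo" tool.
SERVER_CODE = textwrap.dedent("""
    import json, sys
    for line in iter(sys.stdin.readline, ""):
        request = json.loads(line)
        if request.get("method") == "tools/list":
            tools = [{"name": "echo", "inputSchema": {"type": "object"}}]
            reply = {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}
        else:
            error = {"code": -32601, "message": "Method not found"}
            reply = {"jsonrpc": "2.0", "id": request["id"], "error": error}
        print(json.dumps(reply), flush=True)
""")


def list_tools_over_stdio() -> list:
    """Spawn the toy server, send tools/list over stdin, and read the reply from stdout."""
    # Launching a child process is what a stdio transport does with a command
    # such as `uvx mcp-server-fetch`.
    proc = subprocess.Popen(
        [sys.executable, "-c", SERVER_CODE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
        proc.stdin.write(json.dumps(request) + "\n")
        proc.stdin.flush()
        reply = json.loads(proc.stdout.readline())
        return reply["result"]["tools"]
    finally:
        proc.stdin.close()  # EOF ends the server's read loop
        proc.wait(timeout=5)
        proc.stdout.close()


print([tool["name"] for tool in list_tools_over_stdio()])  # ['echo']
```

This also shows why a stdio server's lifetime is tied to the host process: the server lives exactly as long as the client keeps its pipes open.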

Note: You can swap out the model_client with any model client that supports function calling!

The beauty of this approach is its flexibility. You can define any MCP server and make it available to your assistant agent using any model client that supports function or tool calling, including OpenAI models, Alibaba models, and local models like Qwen.

In the sample below, let us simply pass in the tools from our fetch MCP server to a local Qwen 2.5 7B model (running on my local machine).

```python
from autogen_core.models import ModelInfo, UserMessage
from autogen_ext.models.openai import OpenAIChatCompletionClient
from autogen_ext.tools.mcp import StdioServerParams, mcp_server_tools

# Point the OpenAI-compatible client at a locally served Qwen model.
qwen_model = OpenAIChatCompletionClient(
    model="qwen2.5-7b-instruct-1m",
    base_url="http://localhost:1234/v1",
    model_info=ModelInfo(vision=False, function_calling=True, json_output=False, family="unknown"),
)

# Set up server params for the fetch MCP server.
fetch_mcp_server = StdioServerParams(command="uvx", args=["mcp-server-fetch"])
tools = await mcp_server_tools(fetch_mcp_server)

result = await qwen_model.create(
    messages=[UserMessage(source="user", content="Summarize the content of https://newsletter.victordibia.com/p/you-have-ai-fatigue-thats-why-you")],
    tools=tools,
)
print(result)
```

And the result of this:

```text
finish_reason='function_calls' content=[FunctionCall(id='409591546', arguments='{"url":"https://newsletter.victordibia.com/p/you-have-ai-fatigue-thats-why-you","max_length":5000,"start_index":0}', name='fetch')] usage=RequestUsage(prompt_tokens=416, completion_tokens=55) cached=False logprobs=None thought=None
```

We see that it generates a tool call message correctly. Swapping the Qwen model into our earlier agent example also gives very similar results.

Note: The MCP server is started by executing the uvx command for MCP servers built in Python. I found these worked best for me (probably because my environment was already set up for them). Servers can also be built in TypeScript and run using the npx command. I tended to run into more errors setting these up (npx command not found, etc.).
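Errors like "npx command not found" usually mean the launcher is not on the PATH of the process that starts the server. A quick standard-library check before constructing server parameters can save some debugging. `check_launchers` is a hypothetical helper; if a launcher is installed somewhere else, passing its absolute path as the `command` is one workaround.

```python
import shutil


def check_launchers(commands=("uvx", "npx")) -> dict:
    """Map each launcher name to its resolved path, or None if it is not on PATH."""
    return {cmd: shutil.which(cmd) for cmd in commands}


for cmd, path in check_launchers().items():
    print(f"{cmd}: {path or 'NOT FOUND (install it or use an absolute path)'}")
```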

Note: MCP is Not There Yet - Current Issues

Despite its promising architecture, MCP has several practical limitations (today) that developers should consider:

Edit - I wrote more about this here

Complex Setup and Testing Limitations

MCP can potentially work well if you have time to design an MCP installation package and scale it as a service your organization can consume. I can see arguments for its centralized credential management as a managed independent service.

However, if you're looking for a clean way to give agents access to tools bundled directly with the agent, MCP might be unnecessarily complex. For example, the code required to set up a simple MCP webpage fetch tool is significantly more than a direct function implementation.
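For comparison, here is a sketch of a direct function implementation of a webpage fetch tool: a plain async function that could be passed to an AutoGen agent via `tools=[fetch]`, just like `get_weather`. It is not equivalent to the MCP fetch server. The `max_length` and `start_index` parameters mimic the arguments visible in the Qwen tool call, but this version returns raw text without any HTML-to-markdown conversion.

```python
import asyncio
import urllib.request


def _download(url: str) -> str:
    """Blocking download that decodes the body using the declared charset, or UTF-8."""
    with urllib.request.urlopen(url, timeout=30) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


async def fetch(url: str, max_length: int = 5000, start_index: int = 0) -> str:
    """Fetch a URL and return up to max_length characters starting at start_index."""
    # Run the blocking download in a worker thread so the event loop stays free.
    text = await asyncio.to_thread(_download, url)
    return text[start_index:start_index + max_length]
```

Because it is an ordinary function, it can be tested directly without starting a server, for example with an offline `data:` URL: `asyncio.run(fetch("data:text/plain,hello"))`.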

There's no straightforward way to test MCP tools with LLMs that support tool calling. Testing options seem limited to:

Building a server using TypeScript or Python SDK (substantial work)

Bundling as an npm or PyPI package for installation

Bundling as a local binary and providing its absolute path in the execution command

This creates a poor developer experience in my opinion.

They do have an inspector tool, but I found it still early-stage and buggy: it kept showing an error connecting to the reference fetch MCP server, which seemed to work correctly when used with an agent.

Note: The MCP client operates within your host application, which can affect your app's performance and stability. This embedded design may introduce latency and cause threading conflicts when your application uses asynchronous processing. For example, if your app runs in a background async thread, you might encounter synchronization errors between this thread and the MCP client's communication with its server.
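One common way to reduce such conflicts, not specific to MCP or AutoGen, is to keep all async client work on a single event loop running in its own thread, and to hand coroutines to that loop from the rest of the application. A minimal sketch, assuming a stub in place of a real tool call (`BackgroundLoop` and `call_tool_stub` are hypothetical names):

```python
import asyncio
import threading
from typing import Any, Coroutine, Optional


class BackgroundLoop:
    """Run a single asyncio event loop in a dedicated thread."""

    def __init__(self) -> None:
        self.loop = asyncio.new_event_loop()
        self._thread = threading.Thread(target=self.loop.run_forever, daemon=True)
        self._thread.start()

    def run(self, coro: Coroutine[Any, Any, Any], timeout: Optional[float] = None) -> Any:
        # Hand the coroutine to the background loop from any thread and block
        # until it completes; all async client work stays on that one loop.
        future = asyncio.run_coroutine_threadsafe(coro, self.loop)
        return future.result(timeout)

    def close(self) -> None:
        # Stop the loop from outside its thread, wait for the thread, then clean up.
        self.loop.call_soon_threadsafe(self.loop.stop)
        self._thread.join()
        self.loop.close()


async def call_tool_stub(name: str) -> str:
    """Hypothetical stand-in for an awaitable MCP tool call."""
    await asyncio.sleep(0.01)
    return f"{name}: ok"


background = BackgroundLoop()
print(background.run(call_tool_stub("fetch")))  # fetch: ok
background.close()
```

In a real application, the MCP client session would also be created by a coroutine submitted to this same loop, so the session and its calls never cross event loops.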

Documentation Biased Toward Claude Desktop

As I dug in, it became clear that a significant criticism of MCP is that its documentation focuses heavily on integration with Claude Desktop, which, in my opinion, is not what most developers (or at least system builders) need. The bulk of examples and videos showcase existing integrations (MCP in Cursor, Windsurf, etc.) rather than helping developers build their own host applications.

When trying out a new protocol or tool, developers typically expect:

A clear tutorial with copy-pastable code

Instructions to run a local version

Examples showing how to integrate it into their own host applications

Instead, the documentation primarily walks through integrating with Claude Desktop with almost no examples showing how to connect MCP to even a simple Python app where an Anthropic model client calls tools fetched from a server. This approach is disappointing for a public protocol that should cater to developers building custom integrations.

Don't get me wrong - it is possible, just not well illustrated. This gap has led to the creation of third-party tools like MCP CLI and MCP Bridge (bridging OpenAI API and MCP) - even more levels of indirection!

Limited, Buggy Reference Implementations

I found several reference implementations to be buggy, with other developers reporting similar experiences.

One of MCP's promises is providing a repository of off-the-shelf usable tools, but the current reality falls short. The official reference servers are limited to basic functionality like Brave Search and webpage fetching, nowhere near the comprehensive toolkit many developers need.

This is reminiscent of OpenAI’s GPT Store project (November 2023): custom versions of ChatGPT that combine instructions, extra knowledge, and any combination of skills. It didn’t go very far.

These issues make me question the basis for articles like this that claim “MCP has Won”.

Conclusion

The idea of a model context protocol is great! Kudos to the engineers at Anthropic making this happen—we are all enriched for it. But, like many other things in the AI space (AutoGen included), it is still very early.

If you hadn't tried MCP and had simply read all the enthusiastic updates shared recently, you might be forgiven for thinking MCP is a silver bullet: an existing repository of tools that you can plug in with a single line and that just works. That is what we all want, something that just works.

In reality, as usual, there's more to it.

Implementation/Integration gaps: Existing MCP server implementations often provide only basic functionality that won't match your specific business requirements. For example, the Google Drive integration merely lists files when your application likely needs much more comprehensive capabilities.

Security concerns: Third-party MCP services like Composio help broaden the set of available MCP servers (they claim 250+ production-ready servers).

There are two key considerations here: the quality of the implementation itself (e.g., potential for data leaks, performance or latency issues), and the risk of sharing authentication credentials with third-party services that proxy them to other platforms (e.g., you’d likely need to share auth tokens with a platform like Composio for it to call tools like Google Drive on your behalf).

Customization and control: Since tools represent your organization's competitive advantage or "secret sauce," relying on generic third-party implementations limits your ability to tailor them to your exact business processes.

For now, I'd recommend experimenting with MCP to understand its potential while being realistic about its current limitations.

I’d also recommend using AutoGen’s BaseTool and FunctionTool interfaces instead of MCP for now, since they make it much easier to test and debug your applications.

The protocol shows promise, but requires significant improvement in developer experience before it can become the standardized "USB-C for AI" that it aspires to be.

Acknowledgment: Shout out to Richárd Gyikó, who helped implement the McpToolAdapter in AutoGen.