I am quite excited to announce the official release of a new version of AutoGen Studio (AGS) - a low-code tool for prototyping and debugging multi-agent systems. This post provides an overview of its features and what you can do with the tool.

Feedback is most welcome!

TLDR

- Overview (AGS is now based on the new v0.4 AutoGen AgentChat API)
- Installation
- Quick Start (Running Your First Agent)
- Creating Teams (Gallery, Team Builder)
- Example use cases (Deep Research, Web Agents)
- Summary of key features, Roadmap

Overview

AutoGen Studio is a developer tool

First and foremost, AutoGen Studio is a developer tool - think of it as an extension of your development environment with capabilities that target multi-agent development. Crafting and debugging multi-agent systems can get challenging, and the space of configurations is exponential. AGS is meant to provide an environment where you can manipulate many configurations, explore them through composition, and review the results.

While it does have a GUI, it is currently not an end user tool or a turnkey product. Some level of development and customization is needed to get the best out of it!

As of January 2025, AutoGen Studio has been rewritten on the new v0.4 API.

AutoGen Studio is built on the high-level AutoGen AgentChat API and inherits all of its key components and abstractions. If you are not familiar with the API, please see this introductory article.

The AgentChat API (and AGS by extension) is built around Teams as its fundamental building block. A Team represents a complete configuration of how agents work together to solve tasks, currently implementing several GroupChat team patterns. Each Team consists of Agents that can utilize LLMs, tools, and memory components. Every Team also includes termination conditions that determine when the task is complete. Think of a Team as your complete, runnable unit - it packages up everything needed for your agents to collaborate effectively on a given task.

Note: Currently the team presets in AgentChat support a GroupChat autonomous execution pattern where agents solve tasks by participating in a conversation. This is different from a chain/workflow pattern where each step and order of execution is predefined.
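To make the GroupChat idea concrete, here is a toy, library-free sketch of a round-robin conversation loop with two stop conditions (a message cap and a stop word). This is not AutoGen's implementation; `ToyAgent`, `Message`, and `run_round_robin` are hypothetical names made up for illustration, and the exact counting rules are an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    source: str
    content: str


@dataclass
class ToyAgent:
    # A toy "agent": a name plus a function that maps the history to a reply.
    name: str
    respond: Callable[[List[Message]], str]


def run_round_robin(
    agents: List[ToyAgent],
    task: str,
    max_messages: int = 10,
    stop_word: str = "TERMINATE",
) -> List[Message]:
    """Let agents take turns until the stop word appears or the cap is hit."""
    history = [Message("user", task)]
    turn = 0
    while len(history) < max_messages:
        agent = agents[turn % len(agents)]  # next speaker in fixed order
        reply = agent.respond(history)
        history.append(Message(agent.name, reply))
        if stop_word in reply:
            break
        turn += 1
    return history


# Two scripted agents: a writer that drafts and a critic that approves on its second turn.
writer = ToyAgent(
    "writer",
    lambda h: f"draft {sum(m.source == 'writer' for m in h) + 1}",
)
critic = ToyAgent(
    "critic",
    lambda h: "TERMINATE" if sum(m.source == "critic" for m in h) >= 1 else "please revise",
)

history = run_round_robin([writer, critic], "Write a haiku")
for m in history:
    print(f"{m.source}: {m.content}")
```

Note that nothing in the loop says what each step should produce, only who speaks next and when to stop. In a chain/workflow pattern, by contrast, the steps themselves and their order would be fixed up front.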

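An example of an AgentChat team in Python is shown below:

    import asyncio

    from autogen_agentchat.agents import AssistantAgent
    from autogen_agentchat.conditions import MaxMessageTermination, TextMentionTermination
    from autogen_agentchat.teams import RoundRobinGroupChat
    from autogen_agentchat.ui import Console
    from autogen_ext.models.openai import OpenAIChatCompletionClient


    def calculator(a: float, b: float, operator: str) -> str:
        # .. calculator implementation
        ...


    async def main() -> None:
        model_client = OpenAIChatCompletionClient(model="gpt-4o-mini")
        # Stop after 10 messages or when "TERMINATE" is mentioned, whichever comes first.
        termination = MaxMessageTermination(max_messages=10) | TextMentionTermination("TERMINATE")
        assistant_agent = AssistantAgent("assistant", model_client=model_client, tools=[calculator])
        team = RoundRobinGroupChat([assistant_agent], termination_condition=termination)
        # Stream each message to the console as the team works on the task.
        await Console(team.run_stream(task="What is the result of 545.34567 * 34555.34"))


    asyncio.run(main())

Installation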

If you are new to AutoGen, the general guidance is to first create a Python virtual environment (venv or conda). This helps prevent dependency conflicts and other issues. Please do it!

    python3 -m venv .venv
    source .venv/bin/activate  # activate your virtual env (or use conda)

Then install autogenstudio from pip.

    pip install -U autogenstudio

Next, set up an API key. If you do not have an OpenAI API key, no worries - we can add that later. Just note that you can't do much until an LLM API key is added.

    export OPENAI_API_KEY=sk-xxxxxxxxxxx

If you are using Windows, I highly recommend using WSL, as most of the functionality is tested on Linux.

Finally, to run the tool:

    autogenstudio ui --port 8081 --appdir ./myapp

In your browser, visit http://localhost:8081/, and you should see the AutoGen Studio interface! Step 1 done!

Quick Start

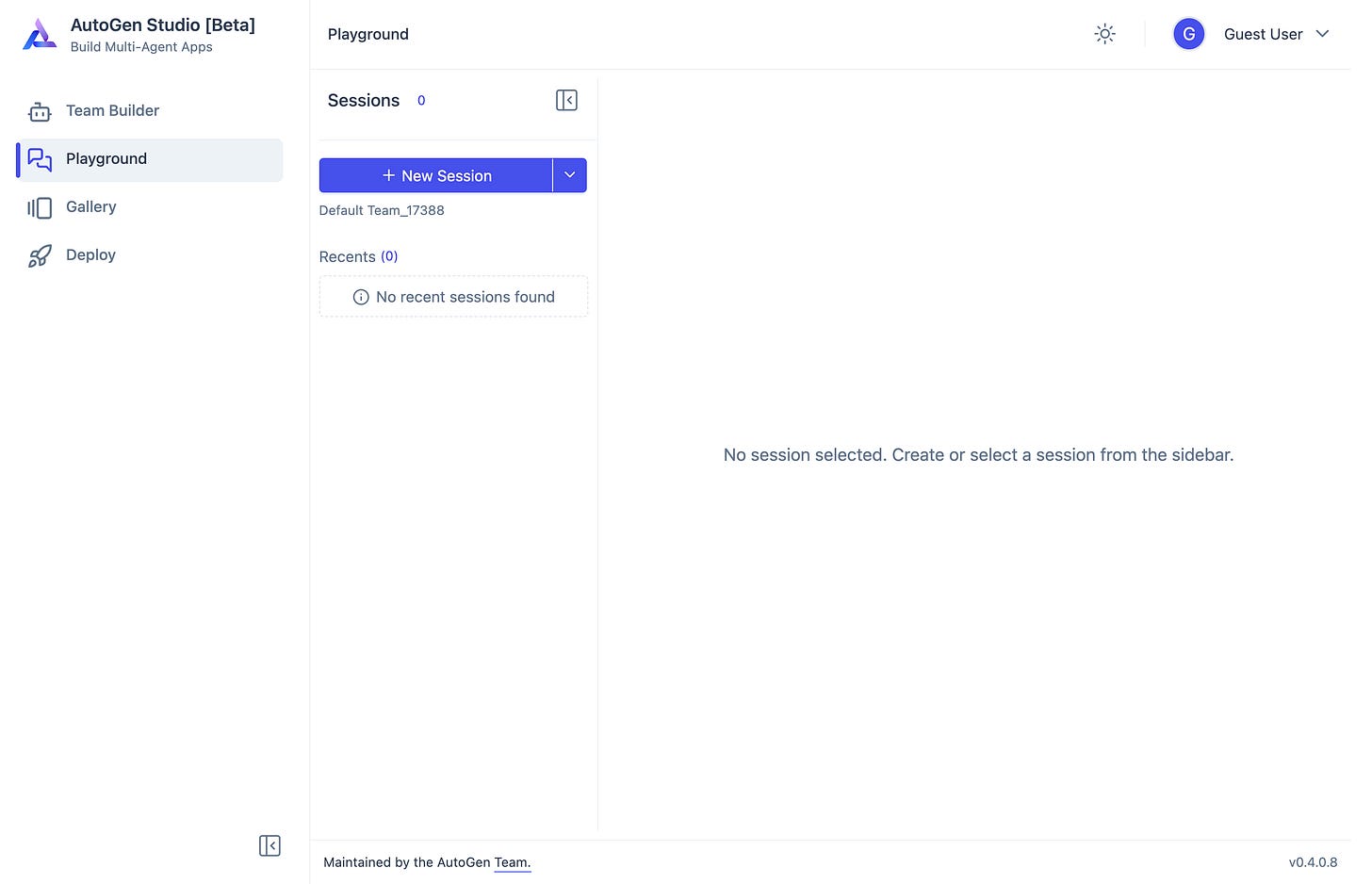

The primary interaction/test space in AGS is the playground. Interactions here can be grouped into sessions.

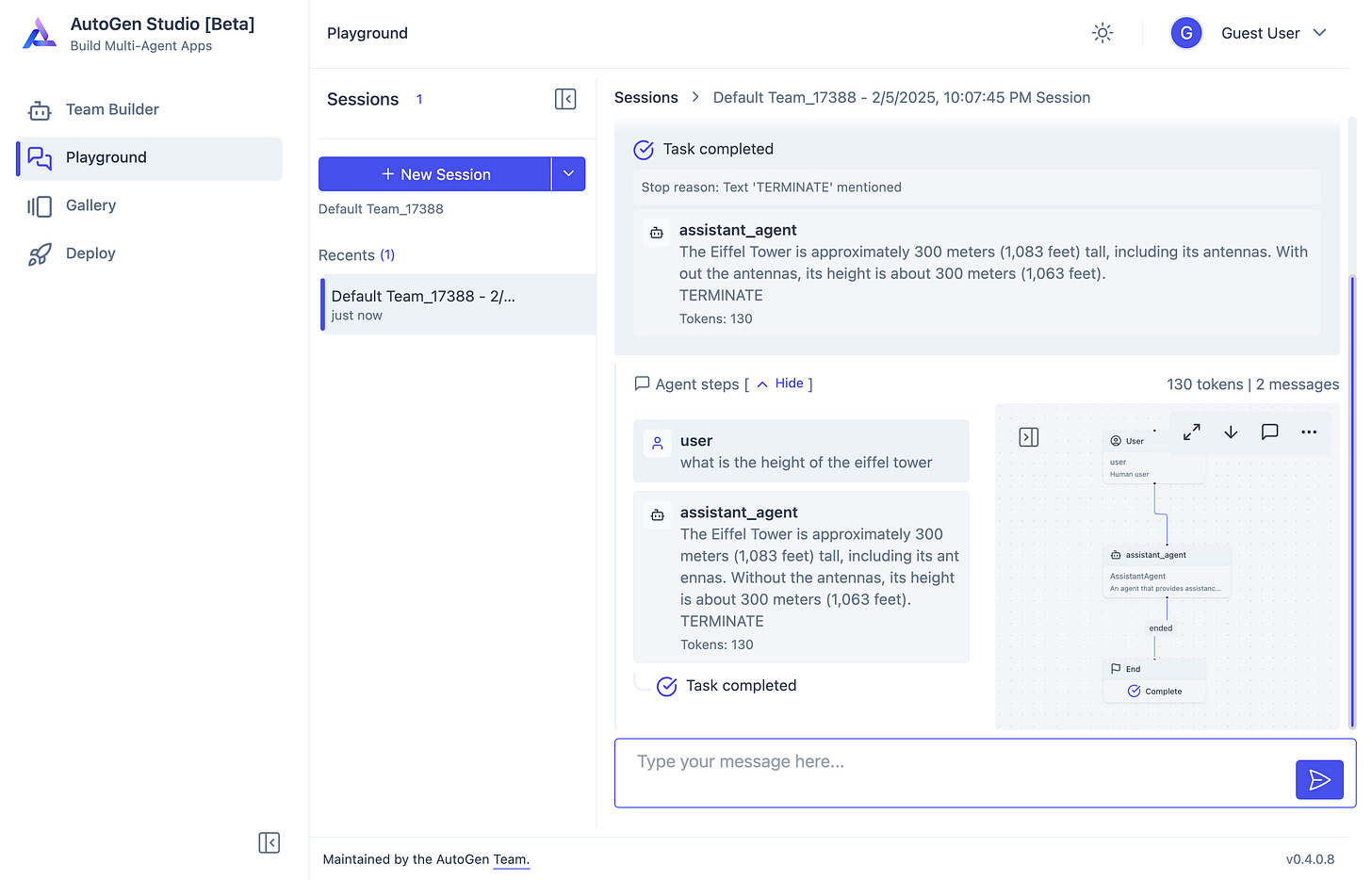

On first open, a default team with two agents is created for you. You can create a session and then give natural language tasks to your team. Assuming you have set your OpenAI API key, you can run the default task ("what is the height of the Eiffel tower") and should see a result that looks like the screenshot below:

Congratulations - you have run your first team with AutoGen Studio! You probably noticed a few things:

- While the team was running, there was a cancel button to stop the run (pretty cool!)
- As the agents respond, each step they take is streamed to the UI and visualized as a graph on the right showing the control flow of messages (with token usage!)
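If you are curious what sits behind a graph like that, here is a rough sketch of the idea: fold a stream of message events into edges that carry a message count and a token total. The `(sender, receiver, tokens)` event format and the `build_flow_graph` helper are assumptions for illustration, not how AGS represents runs internally.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical event format: (sender, receiver, tokens used by the message).
Event = Tuple[str, str, int]
Edge = Tuple[str, str]


def build_flow_graph(events: List[Event]) -> Dict[Edge, Dict[str, int]]:
    """Aggregate message events into edges keyed by (sender, receiver)."""
    edges: Dict[Edge, Dict[str, int]] = defaultdict(lambda: {"messages": 0, "tokens": 0})
    for sender, receiver, tokens in events:
        edge = edges[(sender, receiver)]
        edge["messages"] += 1
        edge["tokens"] += tokens
    return dict(edges)


# Made-up events for a short run between a user, an assistant, and a critic.
events = [
    ("user", "assistant", 12),
    ("assistant", "critic", 140),
    ("critic", "assistant", 35),
    ("assistant", "critic", 95),
]

graph = build_flow_graph(events)
for (sender, receiver), stats in graph.items():
    print(f"{sender} -> {receiver}: {stats['messages']} msg, {stats['tokens']} tokens")
```

Edges that repeat (here assistant to critic) accumulate, which is roughly what lets a visualization show loops in the conversation along with their cost.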

Using Non-OpenAI Models

Note: While this example uses an OpenAI model, you can also use a local model served by, e.g., LM Studio, Ollama, or vLLM. Any model provider that offers an OpenAI-compatible API can be used by simply updating the model client fields.
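As a sketch of what "updating the model client fields" amounts to, the helper below builds the same kind of config for different local servers, changing only the model name and base URL. The field names mirror a subset of the LM Studio configuration in this post; `local_model_config` is a hypothetical helper, the Ollama model name is a placeholder, and the port is Ollama's usual default, so verify both against your own setup. The capability flags are also assumptions that depend on the model you serve.

```python
import json
from typing import Any, Dict


def local_model_config(model: str, base_url: str) -> Dict[str, Any]:
    """Build a model client config pointing at an OpenAI-compatible server."""
    return {
        "model": model,
        "model_type": "OpenAIChatCompletionClient",
        "base_url": base_url,
        "component_type": "model",
        # Assumed capabilities; adjust to what your model actually supports.
        "model_capabilities": {
            "vision": False,
            "function_calling": True,
            "json_output": False,
        },
    }


# LM Studio, using the values from this post.
lm_studio = local_model_config(
    "TheBloke/Mistral-7B-Instruct-v0.2-GGUF", "http://localhost:1234/v1"
)
# Ollama, assuming its default port and a placeholder model name.
ollama = local_model_config("llama3.1", "http://localhost:11434/v1")

print(json.dumps(ollama, indent=2))
```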

An example configuration for a local LM Studio model is shown below. You can add this configuration to the JSON configuration of your team (more on that below).

    {
      "model": "TheBloke/Mistral-7B-Instruct-v0.2-GGUF",
      "model_type": "OpenAIChatCompletionClient",
      "base_url": "http://localhost:1234/v1",
      "api_version": "1.0",
      "component_type": "model",
      "model_capabilities": {
        "vision": false,
        "function_calling": true,
        "json_output": false
      }
    }

Creating Teams

So where did the default team come from and how can we create new ones?

AutoGen Studio has Gallery and Team Builder sections that help with the creation (and distribution) of teams.

Gallery

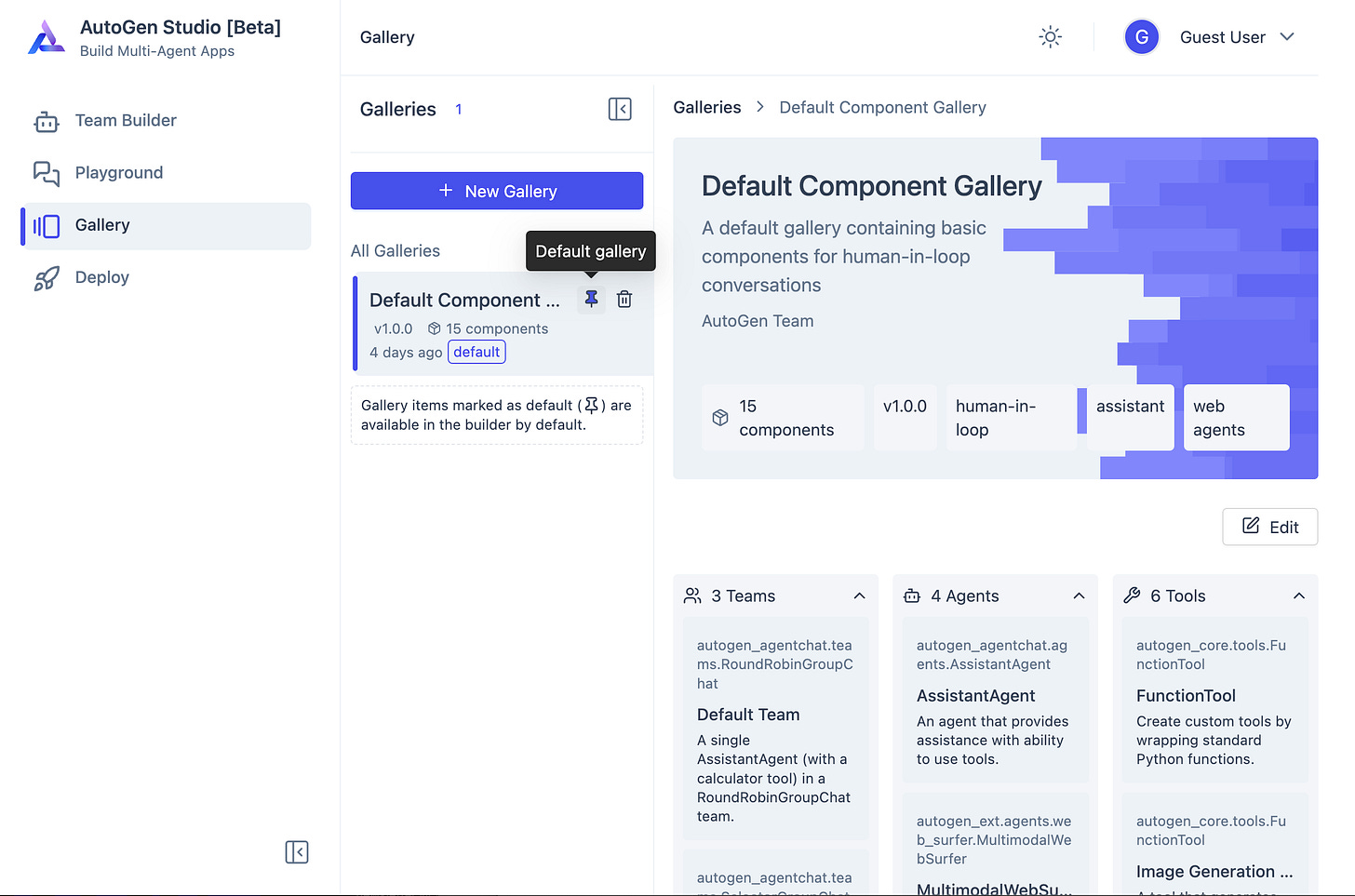

A Gallery is a collection of complete team specifications as well as lower-level components such as agents, tools, models, and termination conditions. Your AGS instance allows you to create multiple galleries (import from a URL, paste, drag in a file) and set any one of them as the default gallery. Items from this default gallery show up in the Team Builder UI.

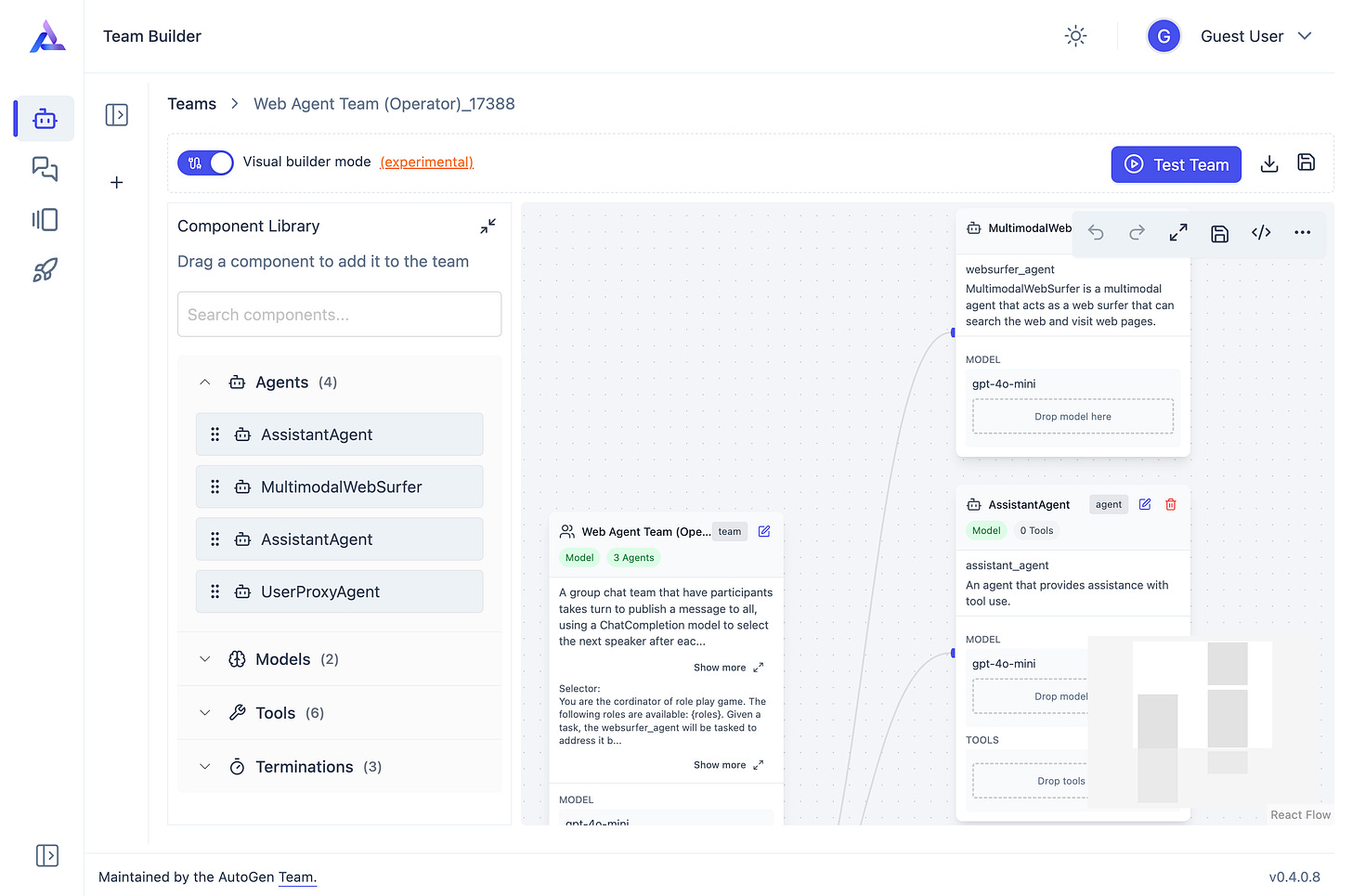

Team Builder

AGS uses a declarative representation (JSON) for a team and all its subcomponents. This configuration specification is also reused across the entire AutoGen framework, enabling interoperability (you can export your teams from Python and run them in AGS).
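One nice property of a declarative spec is that it is just data, so ordinary tools can inspect it. The sketch below walks a nested spec and counts components by type. The spec here is a simplified, hypothetical shape written for illustration (only the `component_type` key is relied on); a real exported team will carry more fields.

```python
import json
from collections import Counter
from typing import Any, Optional


def count_components(node: Any, counts: Optional[Counter] = None) -> Counter:
    """Recursively count every dict that declares a component_type."""
    if counts is None:
        counts = Counter()
    if isinstance(node, dict):
        if "component_type" in node:
            counts[node["component_type"]] += 1
        for value in node.values():
            count_components(value, counts)
    elif isinstance(node, list):
        for item in node:
            count_components(item, counts)
    return counts


# Simplified, hypothetical team spec: one agent with a model and a tool.
spec = json.loads("""
{
  "component_type": "team",
  "config": {
    "participants": [
      {
        "component_type": "agent",
        "config": {
          "name": "assistant",
          "model_client": {"component_type": "model", "config": {"model": "gpt-4o-mini"}},
          "tools": [{"component_type": "tool", "config": {"name": "calculator"}}]
        }
      }
    ],
    "termination_condition": {"component_type": "termination", "config": {"max_messages": 10}}
  }
}
""")

counts = count_components(spec)
print(dict(counts))
```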

The Team Builder view offers a sidebar listing the teams you have created along with teams from the default gallery that ships with AGS. You can click the "New Team" button to create a new default team, or create a team from any of the teams in the gallery.

Component Library

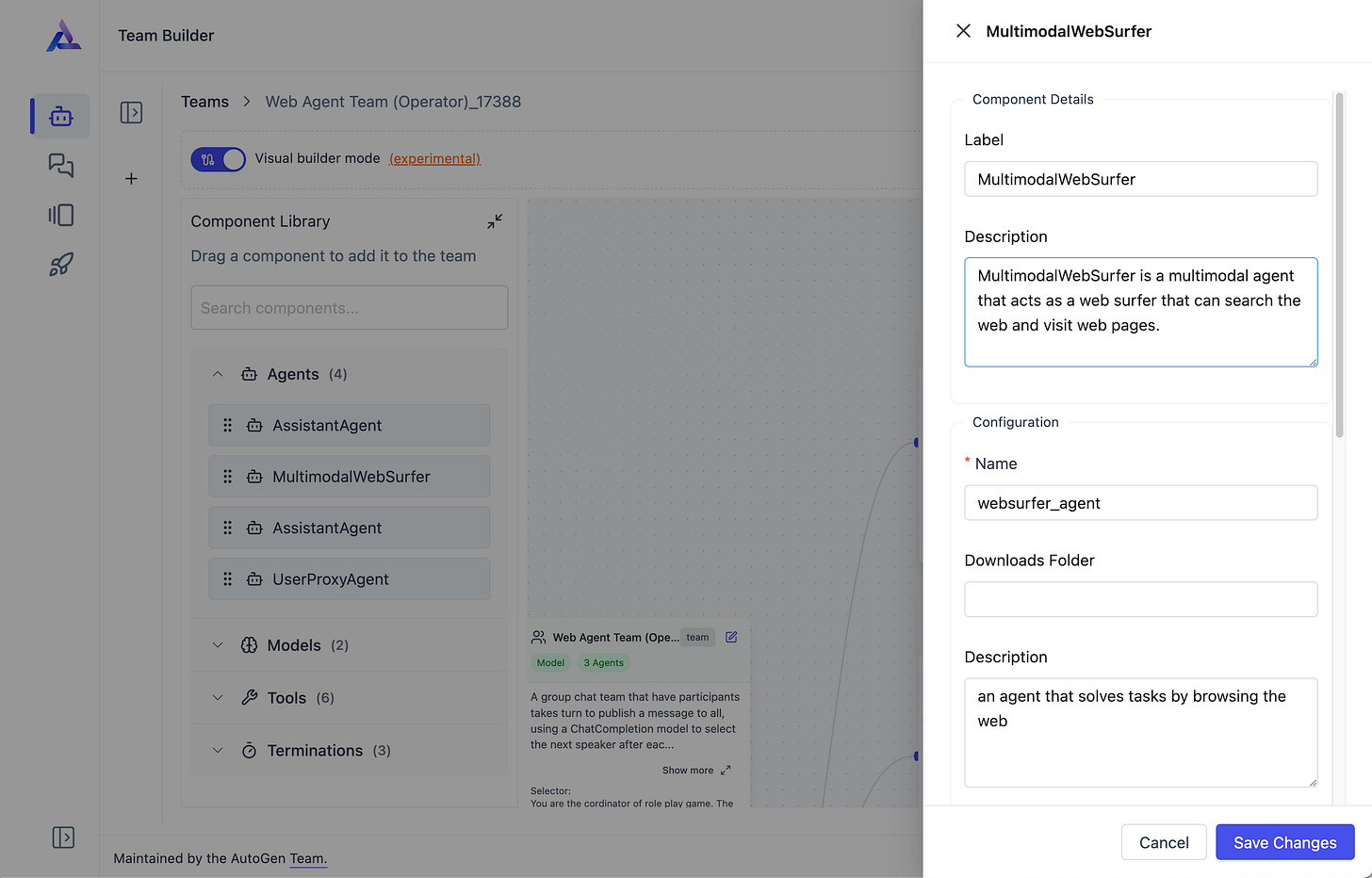

The Team Builder view also has a component library panel that lists the components (agents, models, tools, termination conditions) from the selected default gallery.

Simply put, you can drag agents, models, and termination conditions to a team, and drag tools and models to agents. Each component has "drop zones" that indicate what type of draggable items they can receive.
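The drop-zone rules above boil down to a small lookup table. Here is a minimal sketch of that table with a hypothetical `can_drop` check; the type names are my own shorthand, not identifiers from the AGS codebase.

```python
from typing import Dict, Set

# Which item types each kind of node accepts, per the description above.
DROP_ZONES: Dict[str, Set[str]] = {
    "team": {"agent", "model", "termination"},
    "agent": {"tool", "model"},
}


def can_drop(item_type: str, target_type: str) -> bool:
    """Return True if an item of item_type may be dropped on target_type."""
    return item_type in DROP_ZONES.get(target_type, set())


print(can_drop("tool", "agent"))  # a tool can be attached to an agent
print(can_drop("tool", "team"))   # but not dropped directly on a team
```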

Note: Components in the component library are not meant to be edited directly. Think of them as templates that can be used to bootstrap your team design. However, you can modify the default gallery (in the gallery view) and those changes will be reflected in the component library.

Editing A Team

There are two ways to edit a team in Team Builder:

1. Switch the visual builder toggle to view the raw underlying JSON specification for the team. Only do this if you are familiar with the correct syntax.
2. Use the node diagram UI. Click the edit button on the top right of each node. This will pop up a UI with all the fields. Some fields are nested, and you can click to drill down and make edits. Click save changes for each edit.

Using Python Teams in AutoGen Studio

Yes, this is possible. All you have to do is write the team as usual and then save it to a JSON file by calling .dump_component().model_dump_json(). This will work for properly configured components.

    with open("research_team.json", "w") as f:
        f.write(team.dump_component().model_dump_json(indent=2))

Similarly, you can build and test your agent team in AGS, download the JSON specification, and load it in Python:

    Team.load_component(team_config)

Use Cases

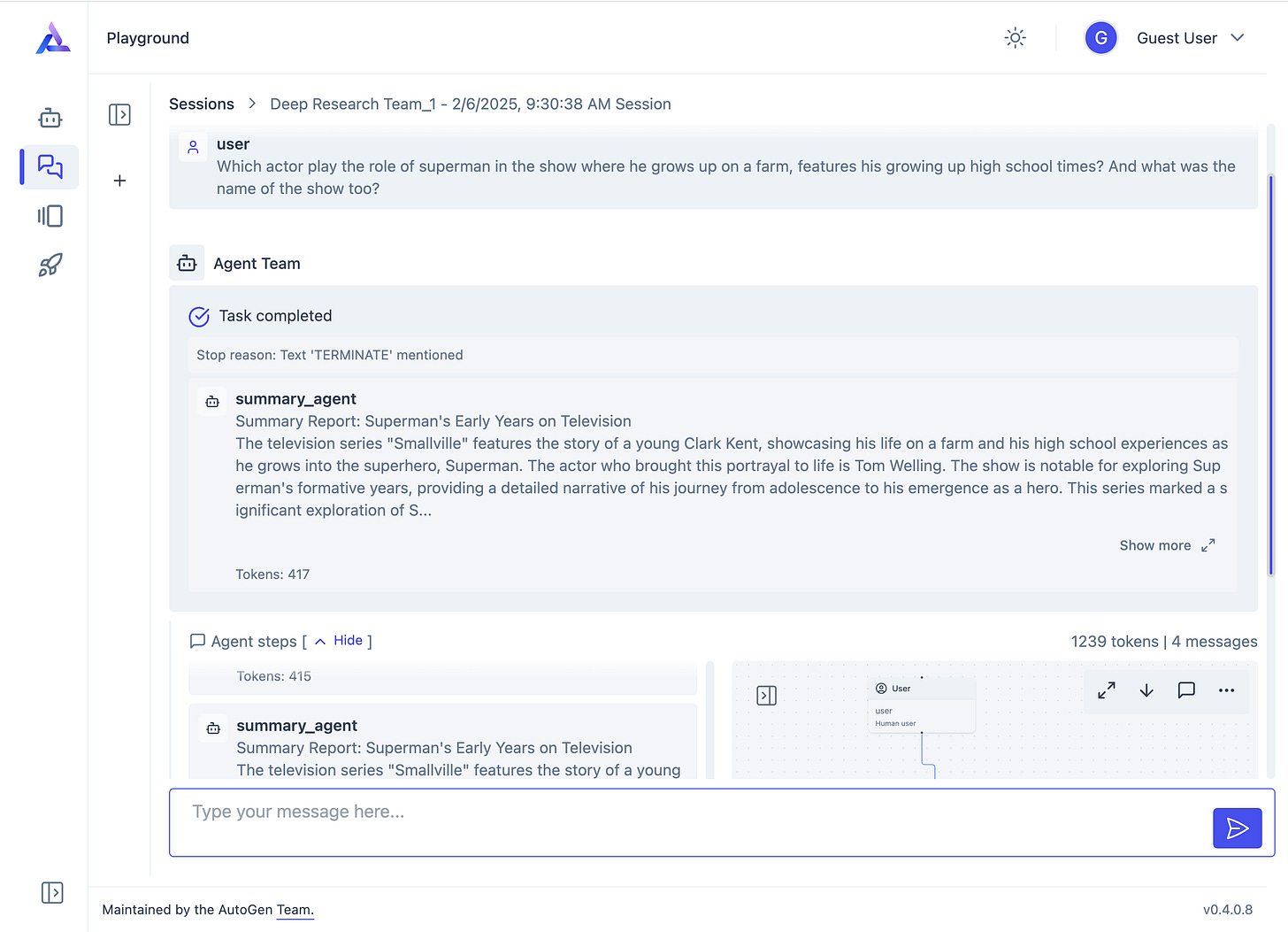

OpenAI recently released the Operator Agent (addresses tasks using a web browser) and the deep research agent (uses tools across multiple steps to solve tasks). Well, we can replicate those easily in AutoGen Studio!

In fact, they come preloaded with AGS. Some interesting queries/tasks you can try:

Web Agents:

"Go to Amazon and find me the prices and links of workout earbuds under 50 USD?"

Deep Research Agent:

"Which actor played the role of Superman in the show where he grows up on a farm, features his growing up high school times? And what was the name of the show too?"

Note: The prompts for the agents in these teams could certainly be improved. If you see behaviors that do not align with your use case (e.g., user delegation occurs too frequently), consider modifying the prompts/system message for the agents.
