Top 5 AI Announcements (and Implications) from the 1st OpenAI DevDay

Issue #15 | Cost reduction, new models, new developer APIs. What do these mean for developers, and what new types of applications can now be built with ease?

OpenAI has established itself as a clear leader in the generative AI space. Firstly, the GPT models demonstrated sophisticated capabilities in complex language modeling. Then, DALL·E set a high bar in the realm of image generation, while Codex showcased its proficiency in generating high-quality code. Crucially, these models, accessible via API, have enabled developers to build a multitude of applications.

Furthermore, OpenAI has been innovative in integrating these models into end-user experiences, as evidenced by the release of ChatGPT and the ChatGPT iOS app. The company has also used these platforms to gather feedback and as a testing ground for many concepts (such as Plugins and Code Interpreter).

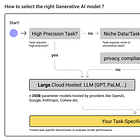

Today marked OpenAI's inaugural developer day, featuring several new announcements. The objective of this post is to reflect on these announcements and, more importantly, to discuss their implications for developers, as well as to explore the new types of applications that can now be built with greater ease.

TL;DR

💰📉 Cost Reduction: The new GPT-4 and GPT-3.5 Turbo models are more capable yet cost less. 🤯🤯.

📈🧠 Improved Model Capabilities: GPT-4 Turbo offers a 128K token context window (about 300 pages of text), an updated knowledge cutoff (April 2023, up from September 2021), and improved function calling.

🎛️🔧 Improved Model Control: The new model series can generate valid JSON-formatted responses using a `response_format` parameter and supports reproducible results through a seed parameter. Additionally, there is upcoming support for accessing log probabilities of generated tokens.

🤖🔗 Agents: The Assistants API: This API supports the creation of agents that can use external knowledge (RAG), act via tools (e.g., code execution and function calling), and maintain arbitrarily long conversations through Threads, all in a unified API for building agents.

🤖🛍️Agents: GPTAgents and Agent Store: OpenAI will create a store where developers can bundle and share GPT agents with some revenue sharing. An Agent here is an LLM+Knowledge+Tools.

Cost Reduction

OpenAI has found a way to consistently reduce costs, and this is truly remarkable. I took the liberty of tracking the price of models over the last few months, and it is interesting to see how steadily the cost of text generation models has fallen.

20x Reduction in LLM Pricing over the last 12 Months

From $0.02 per 1K tokens for Davinci models in March 2023 (the best model available via the API at the time) to $0.001 per 1K input tokens for GPT-3.5 Turbo, this is a 20x decrease in price. OpenAI LLMs have come a long way.

Edit (Jan 24, 2024): OpenAI has reduced GPT-3.5 Turbo input pricing to $0.0005 per 1K tokens.
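The arithmetic behind these reduction factors is easy to check. Below is a minimal sketch using the per-1K-input-token prices quoted above; the 10M-token monthly workload is a made-up number, used only to make the comparison concrete.

```python
# Per-1K-input-token prices quoted in this post (USD).
PRICES_PER_1K = {
    "davinci (Mar 2023)": 0.02,
    "gpt-3.5-turbo (DevDay, Nov 2023)": 0.001,
    "gpt-3.5-turbo (Jan 2024)": 0.0005,
}

def input_cost(tokens: int, price_per_1k: float) -> float:
    """Cost in USD of sending `tokens` input tokens at `price_per_1k` per 1K tokens."""
    return tokens / 1000 * price_per_1k

def reduction_factor(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is than the old one."""
    return old_price / new_price

if __name__ == "__main__":
    baseline = PRICES_PER_1K["davinci (Mar 2023)"]
    monthly_tokens = 10_000_000  # hypothetical workload
    for name, price in PRICES_PER_1K.items():
        print(f"{name}: ${input_cost(monthly_tokens, price):,.2f}/month, "
              f"{reduction_factor(baseline, price):.0f}x cheaper than Davinci")
```

With the January 2024 price, the reduction relative to Davinci grows from 20x to 40x.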

In general, the cost of these models is driven by the number of queries that can be served per unit of hardware (throughput). Here are some thoughts on how OpenAI may have achieved this.

Smaller but More Capable Models: In theory, smaller models let us fit more copies on the available GPUs and run more queries in parallel. Recent research suggests that carefully curated training data mixtures, combined with longer training, can yield smaller but more capable models. For example, we see the impact of longer training in Llama 2 and of high-quality data in phi-1.5.

Inference Optimization: This includes efforts in memory management, quantization, dynamic batching of requests, distributed inference, low-level GPU kernel optimizations, etc. These approaches are implemented in libraries like DeepSpeed, NVIDIA TensorRT, CTranslate2, and TGI. It is reasonable to assume that OpenAI has gotten very good at this over the last few years.

Model Architecture/Computation: The attention layer is the main bottleneck in scaling transformer-based models to longer sequences, as its runtime and memory grow quadratically with sequence length. Advances such as FlashAttention (Dao et al., 2022) and FlashAttention-2 (Dao, 2023) use optimizations (tiling, recomputation) to reduce memory requirements from quadratic to linear in sequence length. In turn, this can lead to faster forward passes and higher throughput.
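To make the tiling idea concrete, here is a toy, pure-Python sketch of the core trick behind FlashAttention: keys and values are processed in blocks with an online (running) softmax, so the full row of attention scores is never held at once. It handles a single query vector and only illustrates the math, not the GPU kernel; the block size and the naive reference function are illustrative choices.

```python
import math
from typing import List

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def naive_attention(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Reference: build every score for this query, softmax them, weight the values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]  # full score row; N queries -> N x N matrix
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]

def tiled_attention(q: Vector, keys: List[Vector], values: List[Vector],
                    block_size: int = 2) -> Vector:
    """Online-softmax attention over key/value blocks.

    Only running statistics are kept between blocks: the largest score seen so far,
    the sum of exponentiated scores, and an unnormalized weighted sum of values.
    """
    scale = 1.0 / math.sqrt(len(q))
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [dot(q, k) * scale for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated so far to the new maximum
        # (on the first block, exp(-inf) == 0.0, so nothing carries over).
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vd for a, vd in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]
```

Changing `block_size` does not change the result, only how many scores are held at once. The real kernels apply the same recurrence to tiles of queries, keys, and values kept in fast on-chip GPU memory.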

💡Implications: In addition to best-in-class model performance, the reduced costs (and a trend toward further reductions) make building with OpenAI a winning proposition.

Improved Model Capabilities

OpenAI announced several enhancements to the core capabilities of the new GPT-4 Turbo and GPT-3.5 Turbo models released today.

Extended Context Length in GPT-4: GPT-4 Turbo now has a context window of 128,000 tokens, equivalent to approximately 300 pages of a standard book. This is a significant increase over the previous limit of 32,000 tokens. OpenAI also reports improved accuracy over long contexts.
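A quick way to reason about whether a document fits in the 128K window is OpenAI's rule of thumb that one token is roughly four characters of English text. The sketch below uses that heuristic; for exact counts you would use a real tokenizer such as tiktoken. The 4,096 figure is GPT-4 Turbo's cap on generated tokens, and reserving it as the output budget is an assumption of this sketch.

```python
import math

CONTEXT_WINDOW = 128_000    # GPT-4 Turbo context window, in tokens
MAX_OUTPUT_TOKENS = 4_096   # GPT-4 Turbo's cap on generated tokens
CHARS_PER_TOKEN = 4         # rough heuristic for English text, not an exact tokenizer

def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounding up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def fits_in_context(text: str, reserved_for_output: int = MAX_OUTPUT_TOKENS,
                    window: int = CONTEXT_WINDOW) -> bool:
    """True if the estimated prompt plus the reserved output budget fit in the window."""
    return estimate_tokens(text) + reserved_for_output <= window
```

By this estimate, a 400,000-character manuscript (about 100,000 tokens) fits, while a 500,000-character one (about 125,000 tokens) does not once the output budget is reserved.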

Enhanced Function Calling: The model can now call multiple functions in a single message. Users can send a single request for multiple actions, such as "open the car window and turn off the A/C," which previously required multiple round trips with the model. The accuracy of function calling has also improved, making GPT-4 Turbo more likely to return the correct function parameters.
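Here is a minimal sketch of how an application might handle such a parallel request. `TOOLS` follows the Chat Completions `tools` schema and would be passed as `tools=TOOLS` to `client.chat.completions.create`. The car-control functions are hypothetical, and `example_message` is hand-written in the shape of an assistant message with two `tool_calls` (no API call is made). With the v1 Python SDK the returned message is a Pydantic object, and `message.model_dump()` gives an equivalent dict.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical local implementations the model is allowed to "call".
def set_window(position: str) -> str:
    return f"window {position}"

def set_ac(on: bool) -> str:
    return f"A/C {'on' if on else 'off'}"

REGISTRY: Dict[str, Callable[..., str]] = {"set_window": set_window, "set_ac": set_ac}

# Tool schemas in the Chat Completions `tools` format.
TOOLS = [
    {"type": "function", "function": {
        "name": "set_window",
        "description": "Open or close the car window.",
        "parameters": {"type": "object",
                       "properties": {"position": {"type": "string", "enum": ["open", "closed"]}},
                       "required": ["position"]}}},
    {"type": "function", "function": {
        "name": "set_ac",
        "description": "Turn the air conditioning on or off.",
        "parameters": {"type": "object",
                       "properties": {"on": {"type": "boolean"}},
                       "required": ["on"]}}},
]

def run_tool_calls(message: Dict[str, Any]) -> List[Dict[str, str]]:
    """Execute every tool call in one assistant message and build `tool` replies."""
    replies = []
    for call in message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        replies.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return replies

# Hand-written example of a single response containing two parallel tool calls.
example_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "set_window", "arguments": '{"position": "open"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "set_ac", "arguments": '{"on": false}'}},
    ],
}
```

The `tool` replies are appended to the conversation and sent back in the next request, so both actions are resolved in one round trip.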

Updated World Knowledge: GPT-4 Turbo's knowledge cutoff is now April 2023, so the model should be aware of world events up to that date.

Expanded Modalities in API: The API now includes DALL·E 3, GPT-4 Turbo with Vision, and text-to-speech (TTS) capabilities. With the TTS API, developers can generate human-quality speech from text. The new TTS model features six preset voices and comes in two variants: tts-1, optimized for real-time use cases, and tts-1-hd, optimized for quality.
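Choosing between the two TTS variants is a single parameter. Below is a minimal sketch that builds the arguments for a speech request; the helper name and its `realtime` flag are hypothetical, while the model names and the six voice names are those OpenAI published. The actual SDK call is shown only as a comment, since it needs an API key.

```python
from typing import Dict

# The six preset voices available at launch.
VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}

def speech_request(text: str, voice: str = "alloy", realtime: bool = True) -> Dict[str, str]:
    """Build arguments for a text-to-speech request.

    tts-1 is tuned for low latency (real-time use); tts-1-hd for higher quality.
    """
    if voice not in VOICES:
        raise ValueError(f"unknown voice {voice!r}; expected one of {sorted(VOICES)}")
    return {"model": "tts-1" if realtime else "tts-1-hd", "voice": voice, "input": text}

# With the official Python SDK (v1.x) and an API key configured, e.g.:
#   from openai import OpenAI
#   client = OpenAI()
#   audio = client.audio.speech.create(**speech_request("Hello!", voice="nova"))
#   audio.stream_to_file("hello.mp3")
```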

💡Implications: General application performance should improve simply by switching to these models. Imagine better summarization, better idea generation, and so on for your users and clients.

Improved Model Control

Model Output Control: The new models support a JSON mode that ensures the model always generates valid JSON. To enable it, set `response_format` to `{ "type": "json_object" }`. When JSON mode is enabled, the model is constrained to generate only strings that parse into valid JSON. This mode is particularly useful for developers who need to generate JSON in the Chat Completions API outside of function calling.
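Here is a minimal sketch of a JSON-mode request and the check an application still needs on the reply. Two caveats drive the design. First, JSON mode requires that the messages themselves ask for JSON: the API returns an error if the string "JSON" does not appear in the context. Second, JSON mode guarantees syntactically valid JSON, not a particular schema. The helper names and the required keys are illustrative assumptions.

```python
import json
from typing import Any, Dict, List

def json_mode_request(user_prompt: str, model: str = "gpt-4-1106-preview") -> Dict[str, Any]:
    """Chat Completions arguments with JSON mode enabled.

    The system message mentions JSON because JSON mode requires the
    conversation to ask for JSON explicitly.
    """
    return {
        "model": model,
        "response_format": {"type": "json_object"},
        "messages": [
            {"role": "system", "content": "You are a helpful assistant. Reply in JSON."},
            {"role": "user", "content": user_prompt},
        ],
    }

def parse_and_check(content: str, required_keys: List[str]) -> Dict[str, Any]:
    """Parse the model's reply and confirm it has the keys the application needs.

    JSON mode guarantees valid JSON, not a schema, so the shape is checked here.
    """
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [k for k in required_keys if k not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data
```

If `parse_and_check` raises, a typical pattern is to retry the request or fall back to a default, rather than passing malformed data further down the pipeline.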

Reproducible Outputs and Log Probabilities: The new seed parameter enables reproducible outputs by making the model return consistent completions most of the time. This beta feature is useful for use cases such as replaying requests for debugging, writing more comprehensive unit tests, and generally having a higher degree of control over model behavior. Additionally, OpenAI is launching a feature to return the log probabilities for the most likely output tokens generated by GPT-4 Turbo and GPT-3.5 Turbo in the coming weeks.
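A minimal sketch of how these features might be used. The `seed` request needs nothing else; responses also carry a `system_fingerprint`, and OpenAI notes that outputs can differ when it changes, so comparing fingerprints is a cheap sanity check before treating two runs as comparable. For log probabilities, the request parameters that later shipped in Chat Completions are `logprobs=True` and `top_logprobs`. The conversion helpers below are plain math, and the fingerprint values in the usage note are made up.

```python
import math
from typing import Any, Dict, List

def seeded_request(prompt: str, seed: int = 42) -> Dict[str, Any]:
    """Chat Completions arguments for a best-effort reproducible call."""
    return {
        "model": "gpt-3.5-turbo-1106",
        "seed": seed,        # same seed + same parameters -> mostly identical output
        "temperature": 0,    # further reduces sampling variation
        "messages": [{"role": "user", "content": prompt}],
    }

def same_run_conditions(resp_a: Dict[str, Any], resp_b: Dict[str, Any]) -> bool:
    """Outputs are only expected to match when both responses report the
    same backend configuration via `system_fingerprint`."""
    return resp_a.get("system_fingerprint") == resp_b.get("system_fingerprint")

def token_probabilities(logprobs: List[float]) -> List[float]:
    """Convert per-token log probabilities into probabilities in [0, 1]."""
    return [math.exp(lp) for lp in logprobs]

def sequence_probability(logprobs: List[float]) -> float:
    """Joint probability of a generated sequence: sum the log-probs, then exponentiate."""
    return math.exp(sum(logprobs))
```

For example, two responses reporting `"fp_a"` and `"fp_b"` were served under different configurations, so a mismatch in their text is not necessarily a bug in your test.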

💡Implications: Reliability is an important issue when developing with LLMs. In LIDA (a library for automated visualization), several stages of the pipeline require JSON-formatted output, so being able to enforce JSON-structured output directly improves the tool's reliability. Similarly, a seed parameter can help make experiments reproducible.

Agents: The Assistants API

The Assistants API is designed to help developers build powerful AI assistants capable of performing a variety of tasks.

Assistants can call OpenAI’s models with specific instructions to tune their personality and capabilities.

Assistants can access multiple tools in parallel. These can be both OpenAI-hosted tools — like Code interpreter and Knowledge retrieval — or tools you build / host (via Function calling).

Assistants can access persistent Threads. Threads simplify AI application development by storing message history and truncating it when the conversation gets too long for the model’s context length. You create a Thread once, and simply append Messages to it as your users reply.

Assistants can access Files in several formats — either as part of their creation or as part of Threads between Assistants and users. When using tools, Assistants can also create files (e.g., images, spreadsheets, etc) and cite files they reference in the Messages they create.
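OpenAI does not spell out exactly how Threads truncate history, so the following is only a hypothetical illustration of the idea, not the Assistants API. It shows a thread that stores every message but sends only the most recent messages that fit a context budget. The `Thread` and `Message` classes and the four-characters-per-token estimate are assumptions of this sketch.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Message:
    role: str
    content: str

def approx_tokens(text: str) -> int:
    """Crude token estimate (about 4 characters per token); a real tokenizer would be exact."""
    return max(1, len(text) // 4)

@dataclass
class Thread:
    """Toy stand-in for a persistent conversation thread.

    All messages are stored; only the most recent ones that fit the
    context budget are sent with each model request.
    """
    context_budget: int
    messages: List[Message] = field(default_factory=list)

    def append(self, role: str, content: str) -> None:
        self.messages.append(Message(role, content))

    def window(self) -> List[Message]:
        """Select messages newest-first until the budget is full; return them oldest-first."""
        selected: List[Message] = []
        used = 0
        for msg in reversed(self.messages):
            cost = approx_tokens(msg.content)
            if used + cost > self.context_budget:
                break
            selected.append(msg)
            used += cost
        return list(reversed(selected))
```

Dropping the oldest messages first is the simplest policy. Summarizing older turns, or always keeping the system instructions, are common refinements.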

💡Implications: So much to unpack here. This simplifies the process of building agents that can act via tools (code interpreter, and knowledge retrieval). Not having to implement your own sandbox environment for code execution (assuming your use case requires this) is a win. Also, this *could replace* your RAG implementation details and can simplify the creation of prototypes that require knowledge retrieval (you do not have to implement your own vector database). Finally, with Threads, OpenAI takes on the task of managing long running conversations as well as managing context length issues (which every developer runs into at some point).

Of course, there are limitations associated with handing over your data processing pipeline to the OpenAI API: lack of control (e.g., over fine-grained embeddings/retrieval), difficulty integrating with your current data sources at scale (e.g., millions of documents), privacy restrictions, and costs (you are charged by file size, etc.). It is unclear whether this approach will scale beyond simple use cases.
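For a sense of what the built-in retrieval tool replaces, here is a minimal in-memory RAG sketch. A bag-of-words term-count vector stands in for a real embedding model, and a Python list stands in for a vector database. This is not how OpenAI's retrieval works internally. All class and function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class TinyVectorStore:
    """In-memory index: store chunks with their vectors, return the top-k matches."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

def build_prompt(question: str, store: TinyVectorStore, k: int = 2) -> str:
    """Augment the question with retrieved context before sending it to the model."""
    context = "\n".join(f"- {c}" for c in store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Every piece of this sketch (chunking, the embedding choice, the index, top-k selection) is a knob you control when you build retrieval yourself, which is exactly the fine-grained control you give up with a hosted tool.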

Agents: GPTAgents and Agent Store

GPT Agents: OpenAI introduced GPTs, customized versions of ChatGPT that combine instructions, expanded knowledge (e.g., retrieval augmentation based on uploaded files), and actions (e.g., the ability to use Code Interpreter to write and run code). The idea is to gradually build toward agents that can act on behalf of users to solve tasks. For example, you can build a GPT to help you learn the rules of any board game, help teach your kids math, or design stickers. Once created, GPTs can be shared for others to use.

GPT Store: The GPT Store showcases creations by verified builders. Once featured in the store, GPTs become searchable and have the potential to rise in the leaderboards. OpenAI will spotlight the most useful and delightful GPTs encountered, categorizing them under productivity, education, and "just for fun."

In the upcoming months, creators will have the opportunity to earn money based on the usage of their GPT by others.

Other Announcements

White Glove Custom Model Service: OpenAI is offering a White Glove Custom Model service, which will enable organizations to train a model on a new knowledge domain using proprietary data. OpenAI researchers will collaborate with clients, providing domain-specific training and post-training support. This comprehensive service will modify every step of the model training process, including additional domain-specific pre-training and a custom reinforcement learning post-training process tailored to the specific domain. Organizations that opt for this service will have exclusive access to their custom models. Due to the intensive nature of this service, it will initially be very limited and priced accordingly. Interested organizations can apply through OpenAI.

GPT Builder: The DevDay keynote showed GPT Builder, a user-friendly interface that lets users create GPTs using natural language instructions.

Copyright Shield: OpenAI will step in and defend customers, and pay the costs incurred, if they face legal claims around copyright infringement related to using OpenAI products. This applies to generally available features of ChatGPT Enterprise and the developer platform.

Conclusion

As a developer, I find the recent announcements here very exciting. Cost reductions could make these models more practical to use. The Assistant API facilitates prototyping complex agent workflows, eliminating the extensive infrastructure work that was previously burdensome, such as implementing a RAG workflow, managing long conversation contexts, and executing code.

Moreover, the capability to generate output constrained to a valid JSON format, the option to set a seed for reproducibility, and access to log probabilities are significant steps toward addressing reliability issues with large language models (LLMs).

While some of the ideas introduced may not be entirely new, they certainly represent significant quality-of-life improvements for engineers attempting to build Generative AI apps.

References

New models and developer products announced at DevDay. https://openai.com/blog/new-models-and-developer-products-announced-at-devday

Introducing GPTs. https://openai.com/blog/introducing-gpts
