4 UX Design Principles for Autonomous Multi-Agent AI Systems

#42 | A summary from a keynote talk I gave at the 2025 AI.Engineer World's Fair Conference.

I gave a keynote talk at the 2025 AI.Engineer conference titled “UX Design Principles for Semi-Autonomous Multi-Agent Systems”.

It was a short talk, and my goal was to distill a set of UX design principles that developers can apply while building experiences for autonomous multi-agent systems. It is largely based on my experience prototyping multi-agent systems, maintaining AutoGen (a framework for building multi-agent apps), and building and maintaining AutoGen Studio, a low-code interface for prototyping multi-agent applications.

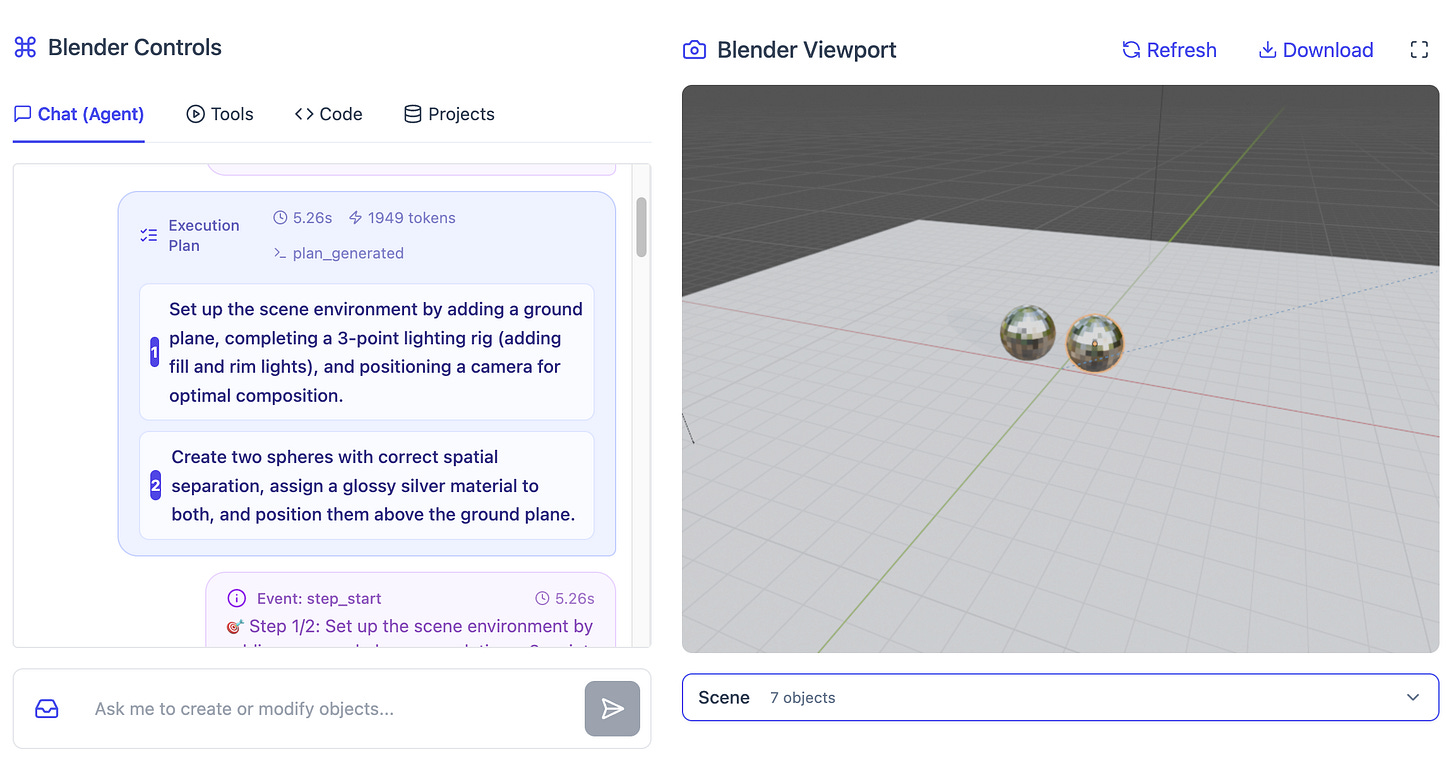

Also, to illustrate these ideas, I built a multi-agent system from scratch (no frameworks, just pure Python and OpenAI calls): BlenderLM. BlenderLM is a multi-agent system that takes a natural language query and drives the Blender 3D creation tool (via its Python API) to address the task.

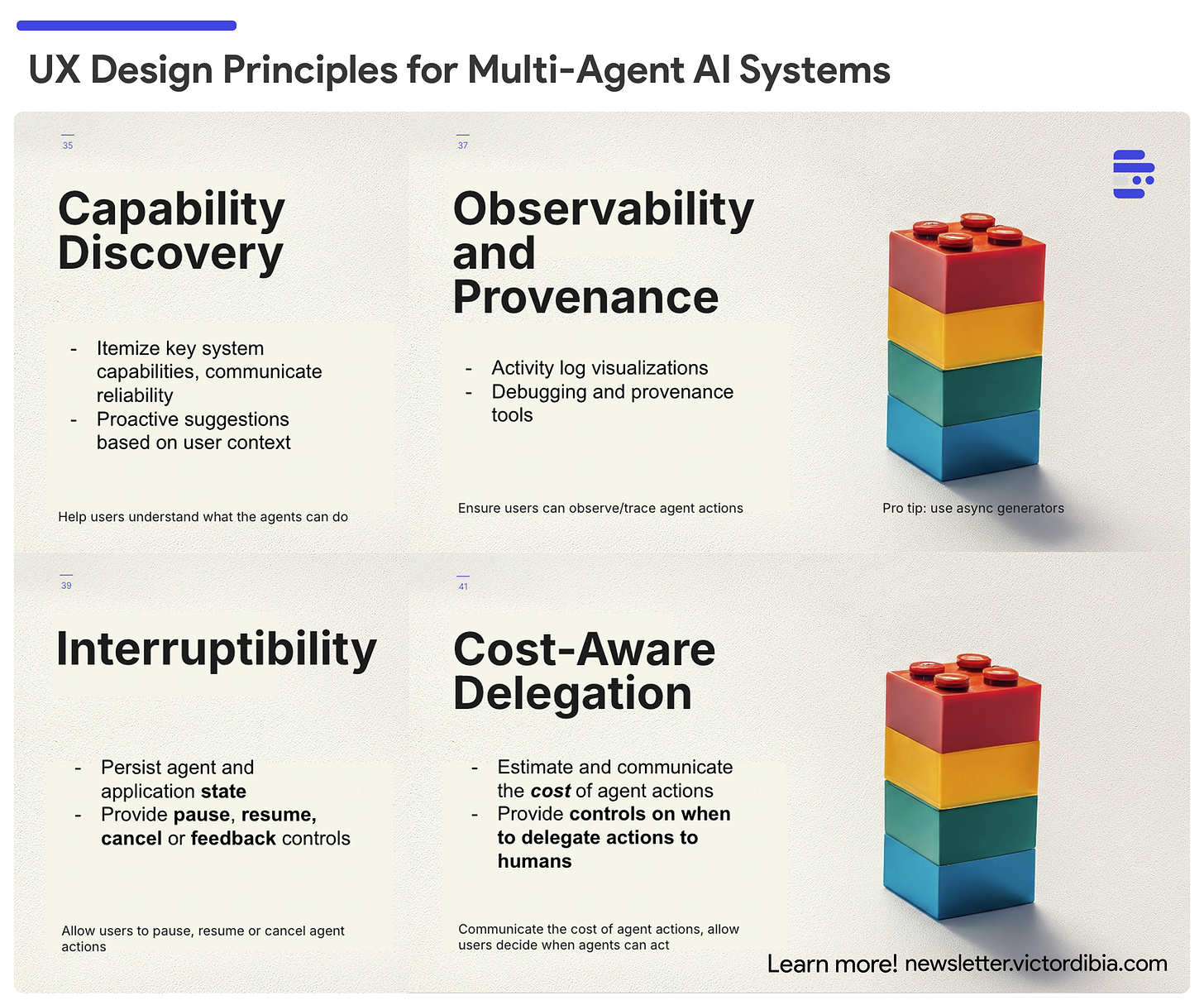

TLDR on the principles I recommended:

- Capability discovery (Help users understand what the agents can do)

- Observability and provenance (Ensure users can observe/trace agent actions)

- Interruptibility (Allow users to pause, resume or cancel agent actions)

- Cost-aware delegation (Communicate the cost of agent actions, and allow users to decide when agents can act)

Beyond Workflows to Multi-Agent Systems

I wrote in a 2024 AI Agent rewind that most “agents” in production are really workflows: a set of deterministic steps designed by an engineer with a few LLM calls sprinkled in. This provides reliability and is indeed the right approach for many tasks, but it still requires that the developer knows the exact solution to the problem and can express it in the outlined steps.

On the other hand, multi-agent systems (MAS), where LLMs drive the control flow of execution, are suited to problem spaces where the exact solution is unknown. This is software that can take actions, observe results, and interactively explore solution spaces, but it also introduces new challenges around reliability, user trust, and system comprehensibility. Common use cases that are seeing benefits from a MAS approach include software engineering, back-office tasks, and deep research.

The UX principles discussed here apply to these types of systems: systems with autonomy and the ability to act on complex, long-running tasks.

IMO there are properties of MAS to keep in mind that often necessitate careful UX design considerations:

Autonomy | Can do many different things

Action | Can take action with side effects

Duration | Complex long-running tasks

Building A Multi-Agent System From Scratch

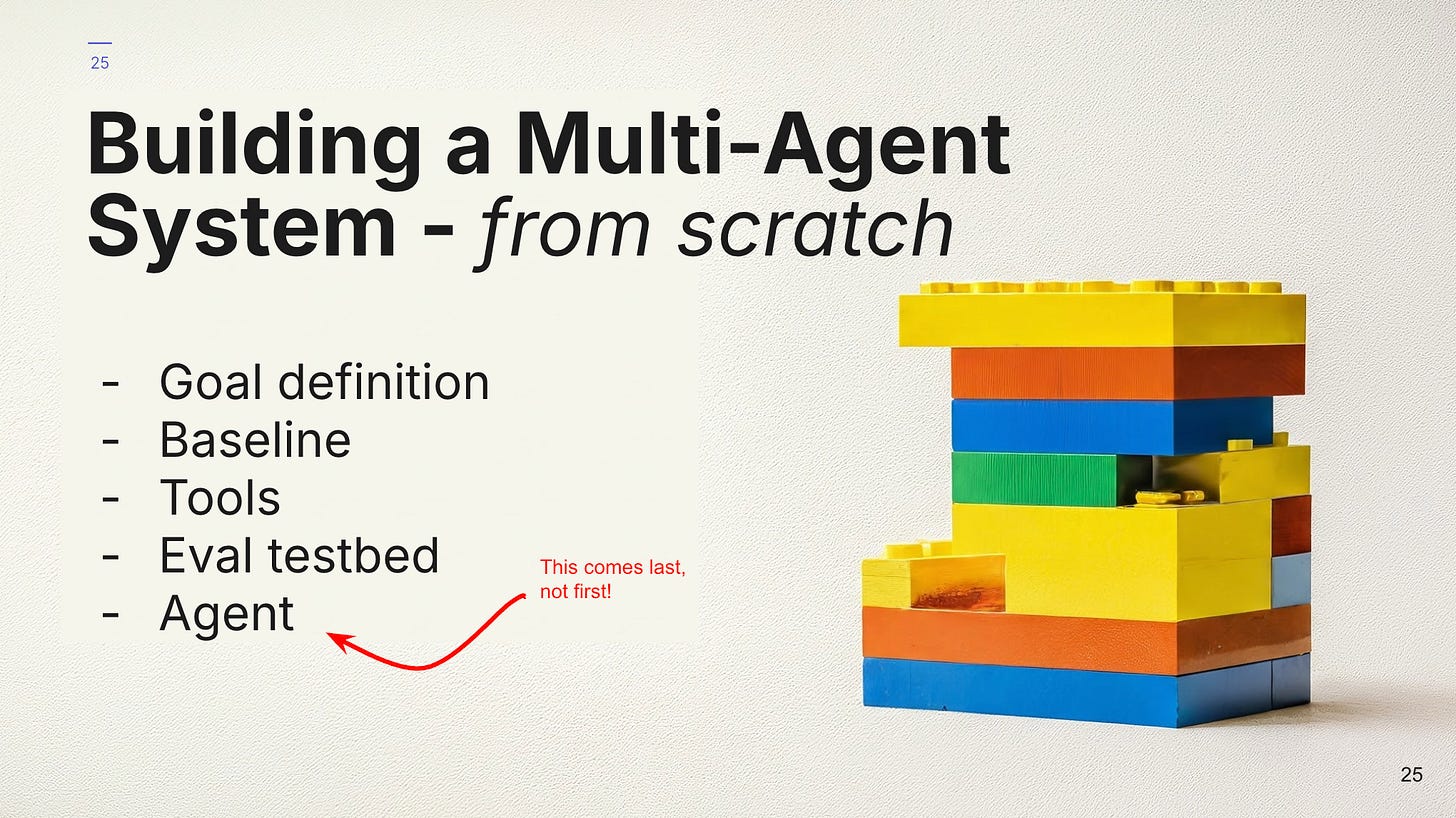

I often see developers start the agent development process by immediately attempting to code up an Agent class or some agentic behaviors, e.g., selecting the prompt/model or fretting over concepts like memory. This is often a mistake.

Instead, if we take a step back from the current agent hype, it becomes clear that a typical software development life cycle approach still applies. I tend to follow a 5-stage process:

1. Goal definition (what are we building)

2. Baseline (what is a non-agentic baseline)

3. Tools (Architect, build and test the tools that the agent will use. You should spend the most time here)

4. Eval testbed (Interactive, e.g., a Jupyter notebook or UI, or an offline benchmark for evaluating systems)

5. Agent (Only work on this after steps 1-4 above. Begin with a tight loop of an LLM calling tools, then optimize, e.g., add more agents and evaluate)

Also, about 70% of the effort should go to steps 3 and 4: building your tools and tuning your evaluation harness.
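To make the "tight loop of an LLM calling tools" from the agent stage concrete, here is a minimal sketch. It is not BlenderLM's code: the tool names, the `fake_llm` stand-in (used instead of a real model call so the example runs offline), and the decision format are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical tools. In a real system these would wrap the Blender Python API.
def create_object(kind: str) -> str:
    return f"created {kind}"

def list_objects() -> str:
    return "scene objects: (stubbed)"

TOOLS: dict[str, Callable[..., str]] = {
    "create_object": create_object,
    "list_objects": list_objects,
}

def fake_llm(task: str, history: list[dict]) -> dict:
    """Stand-in for a model call: returns the next tool call or a final answer."""
    if not history:
        return {"tool": "create_object", "args": {"kind": "sphere"}}
    return {"final": f"done after {len(history)} tool call(s)"}

def run_agent(task: str, llm=fake_llm, max_steps: int = 5) -> tuple[str, list[dict]]:
    """Loop: ask the model what to do, run the chosen tool, feed the result back."""
    history: list[dict] = []
    for _ in range(max_steps):
        decision = llm(task, history)
        if "final" in decision:
            return decision["final"], history
        tool = TOOLS[decision["tool"]]
        result = tool(**decision["args"])
        history.append(
            {"tool": decision["tool"], "args": decision["args"], "result": result}
        )
    # A step budget keeps a confused model from looping forever.
    return "stopped: step budget exhausted", history

answer, trace = run_agent("create a scene with a glossy ball")
```

Swapping `fake_llm` for a real model call leaves the loop unchanged, which is one reason to get the tools and the eval testbed right before adding more agents.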

One of the reasons for building a demo from scratch for this talk was to let me think critically through my process, as well as validate many of the UX principles I have followed while building and maintaining AutoGen Studio. I built BlenderLM, a sample tool that takes natural language tasks, e.g., “create a scene with two glossy balls”, and uses an agentic system to drive the underlying Blender Python API to accomplish the task.

TLDR: BlenderLM consists of 3 agents. It includes a React UI where the user can initiate tasks that kick off an agent run, with bidirectional communication over a WebSocket.

Main/Orchestrator Agent: Has access to a set of task-specific (e.g., create Blender object) and general-purpose (code execution) tools, and can call other agents in a loop to address a task.

Planner Agent: Takes the task and system state and generates a plan to address the task

Verifier Agent: Takes a task and system state and generates an assessment of whether the task is accomplished. This is used with some retry logic to determine when steps in a task are accomplished.
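The interplay between the three agents can be sketched roughly as follows. This is an illustration under assumptions, not BlenderLM's actual implementation: the planner and verifier are plain functions standing in for LLM calls, `SceneState` is a made-up state object, and the "flaky" executor exists only to exercise the retry path.

```python
from dataclasses import dataclass, field

@dataclass
class SceneState:
    """Hypothetical stand-in for the Blender scene state."""
    objects: list[str] = field(default_factory=list)

def planner(task: str, state: SceneState) -> list[str]:
    # A real planner would be an LLM call that uses the task and the state.
    return ["add sphere", "add sphere"]

def execute(step: str, state: SceneState, attempt: int) -> None:
    # Simulated flaky tool: the first attempt at each step silently does nothing.
    if attempt > 0:
        state.objects.append(step.removeprefix("add "))

def verifier(step_index: int, state: SceneState) -> bool:
    # A real verifier would be an LLM call that inspects the task and the state.
    return len(state.objects) > step_index

def orchestrate(task: str, max_retries: int = 2):
    state = SceneState()
    log: list[tuple[str, int, bool]] = []
    for i, step in enumerate(planner(task, state)):
        for attempt in range(max_retries + 1):
            execute(step, state, attempt)
            ok = verifier(i, state)
            log.append((step, attempt, ok))
            if ok:
                break  # step verified, move on to the next one
        else:
            raise RuntimeError(f"step failed after retries: {step}")
    return state, log

state, log = orchestrate("create a scene with two glossy balls")
```

The `log` of (step, attempt, verdict) tuples is also the kind of record a UI could surface to the user.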

The details of BlenderLM will probably be a separate post!

Design Principles

The 4 principles below are not exhaustive, but have pretty high coverage. Multi-agent systems are still early and it is likely the ideas below will be revised as the space evolves.

Capability Discovery

Help users understand what the agents can do

Agents have autonomy. This implies that they can do many things. In reality, each agent has specific configurations—system prompts, available tools, and internal logic—that make it more reliable at certain types of tasks compared to others. But the user does not know this. This creates a discovery problem: users don't know which tasks will work well and which might fail, leading to frustration and mistrust when the agent underperforms on unsuitable tasks.

This principle advocates that the UX nudge the user towards high-reliability task examples, or even proactively suggest relevant high-reliability tasks given the user’s context and the system’s capabilities.

Many tools already do this well by having a set of sample tasks as presets that the user can select to get started.
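One possible way to back such presets with data is to tag each sample task with the tools it needs and a reliability score, then surface only the ones the current configuration can handle well. The sketch below assumes exactly that. The `SampleTask` type, the tool names, and the reliability numbers are all invented for illustration; in practice the scores would come from your eval testbed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SampleTask:
    prompt: str
    required_tools: frozenset[str]
    reliability: float  # hypothetical pass rate measured on an eval testbed

# Illustrative presets only; the numbers are made up.
PRESETS = [
    SampleTask("Create a scene with two glossy balls",
               frozenset({"create_object", "set_material"}), 0.95),
    SampleTask("Render the scene from a top-down camera",
               frozenset({"set_camera", "render"}), 0.80),
    SampleTask("Animate a bouncing ball",
               frozenset({"create_object", "keyframe"}), 0.55),
]

def suggest_tasks(available_tools: set[str],
                  min_reliability: float = 0.75,
                  limit: int = 3) -> list[str]:
    """Return preset prompts the agent can run with its tools, most reliable first."""
    candidates = [
        t for t in PRESETS
        if t.required_tools <= available_tools and t.reliability >= min_reliability
    ]
    candidates.sort(key=lambda t: t.reliability, reverse=True)
    return [t.prompt for t in candidates[:limit]]

suggestions = suggest_tasks({"create_object", "set_material", "keyframe"})
```

Here the animation preset is withheld because its score falls below the threshold, and the render preset is withheld because the required tools are missing.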

Observability and Provenance

Ensure users can observe/trace agent actions

Autonomous agents can explore trajectories that are non-deterministic and only known at run time. Each run with the same input can lead to significantly different trajectories. This makes it important for the end user to observe these trajectories both to build trust that the agent is doing the right thing as well as to learn more about its capabilities and limitations, and improve their task formulation approach.

In BlenderLM, this is implemented by streaming all updates as the agents work: structured plans, the number of steps, the current step and, where appropriate, duration and cost (LLM tokens). Tools like Agent mode in VS Code, Cursor, and Windsurf also do this well by streaming actions as agents make progress.
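A minimal sketch of what a streamed update could look like is shown below. The event fields and the `RunTrace` helper are assumptions for illustration, not BlenderLM's actual schema. The `sink` callable stands in for whatever transport the UI uses (for example, a WebSocket send).

```python
import json
import time
from dataclasses import asdict, dataclass, field

@dataclass
class AgentEvent:
    run_id: str
    agent: str        # which agent produced the event
    kind: str         # e.g. "plan", "tool_call", "tool_result", "verdict"
    step: int
    total_steps: int
    payload: dict
    tokens: int = 0   # LLM tokens spent producing this event
    timestamp: float = field(default_factory=time.time)

class RunTrace:
    """Keeps every event for provenance and forwards each one to a sink."""

    def __init__(self, run_id: str, sink=print):
        self.run_id = run_id
        self.sink = sink
        self.events: list[AgentEvent] = []

    def emit(self, agent: str, kind: str, step: int, total_steps: int,
             payload: dict, tokens: int = 0) -> AgentEvent:
        event = AgentEvent(self.run_id, agent, kind, step, total_steps, payload, tokens)
        self.events.append(event)
        self.sink(json.dumps(asdict(event)))  # stream to the UI as JSON
        return event

    def total_tokens(self) -> int:
        return sum(e.tokens for e in self.events)

sent: list[str] = []
trace = RunTrace("run-1", sink=sent.append)
trace.emit("planner", "plan", 0, 2, {"steps": ["add sphere", "set material"]}, tokens=120)
trace.emit("orchestrator", "tool_call", 1, 2, {"tool": "create_object"}, tokens=80)
```

Keeping the full event list, rather than only streaming it, is what lets a user trace afterwards which agent did what and at what cost.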

Interruptibility

Allow users to pause, resume or cancel agent actions

Autonomous multi-agent systems can run for extended periods, make multiple tool calls, and take actions with real-world resource implications. In some cases, particularly in human-in-the-loop settings, users may observe an expensive or problematic operation trajectory and need to pause the system to provide feedback, course-correct, or cancel entirely.

This principle advocates for designing systems where users can interrupt agent execution at any point, pause long-running tasks, and resume from where they left off without losing progress or system state.

BlenderLM implements this with pause/stop controls that allow users to halt execution at any point during a task.
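One common way to get this behavior is to check a shared control object between steps. The sketch below assumes that design. `RunController`, `run_steps`, and the step functions are hypothetical names, and in a real app `pause()`, `resume()`, and `cancel()` would be called from another thread, such as the handler for UI messages.

```python
import threading

class Cancelled(Exception):
    """Raised at a checkpoint when the user has cancelled the run."""

class RunController:
    def __init__(self) -> None:
        self._running = threading.Event()
        self._running.set()               # start in the "running" state
        self._cancelled = threading.Event()

    def pause(self) -> None:
        self._running.clear()

    def resume(self) -> None:
        self._running.set()

    def cancel(self) -> None:
        self._cancelled.set()
        self._running.set()               # wake a paused run so it can exit

    def checkpoint(self) -> None:
        self._running.wait()              # blocks here while paused
        if self._cancelled.is_set():
            raise Cancelled()

def run_steps(steps, controller: RunController, completed=None) -> list:
    """Run steps in order, skipping those already recorded in `completed`."""
    completed = [] if completed is None else completed
    for step in steps[len(completed):]:
        controller.checkpoint()           # the only place the run can be interrupted
        completed.append(step())
    return completed

controller = RunController()
done: list[str] = []

def step_b() -> str:
    controller.cancel()  # simulate the user pressing stop while step b runs
    return "b"

steps = [lambda: "a", step_b, lambda: "c"]
try:
    run_steps(steps, controller, done)
except Cancelled:
    pass  # `done` still holds the finished work

progress_at_stop = list(done)
run_steps(steps, RunController(), done)   # a new run resumes after the last finished step
```

Because progress lives in `done` and not inside the loop, stopping does not throw away completed steps. Interruption here is only as fine-grained as the steps themselves, so a long-running tool call would need its own checkpoints.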

Cost-Aware Delegation

Quantify and communicate the cost of agent actions, and allow users to decide when agents can act.

Agents can act, and these actions can have side effects that range from benign (executing code to add a cone to a 3D scene) to catastrophic (executing code that deletes critical system files). Some actions are reversible and can be rolled back, while others cannot: once an email is sent to the CEO, there's no taking it back. To an agent, the costs, risks, and safety implications associated with each action may not be immediately apparent without specific design.

This principle advocates for implementing specialized "risk/cost classifiers" that can assess and return risk levels such as low/medium/high. In turn, the UX should then allow users to control and configure response behavior—for example, automatically allowing all low-risk actions while requiring explicit approval for medium and high-risk operations.
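A rough sketch of how a risk classifier and a user-configurable approval threshold could fit together is shown below. The rules in `classify_risk` are toy rules invented for illustration (a real classifier might be a model or a curated policy), and the action dictionary format and the `approve` callback are assumptions.

```python
from enum import IntEnum

class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3

def classify_risk(action: dict) -> Risk:
    """Toy rule-based classifier. The rules are illustrative only."""
    if not action.get("reversible", False):
        return Risk.HIGH      # cannot be rolled back, e.g. sending an email
    if action.get("tool") == "execute_code":
        return Risk.MEDIUM    # arbitrary code, even if the scene can be restored
    return Risk.LOW

def delegate(action: dict, approve, auto_approve_up_to: Risk = Risk.LOW) -> str:
    """Run low-risk actions automatically and ask the user about the rest.

    `approve(action, risk)` is a callback that returns True if the user agrees.
    """
    risk = classify_risk(action)
    if risk <= auto_approve_up_to or approve(action, risk):
        return "executed"     # a real system would invoke the tool here
    return "blocked"

def deny_all(action: dict, risk: Risk) -> bool:
    return False

add_cone = {"tool": "create_object", "reversible": True}
send_email = {"tool": "send_email", "reversible": False}

outcome_cone = delegate(add_cone, approve=deny_all)
outcome_email = delegate(send_email, approve=deny_all)
```

With the default threshold, adding the cone runs without a prompt while the email is held for explicit approval. Raising `auto_approve_up_to` is how a user would hand the agent more autonomy.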

Key Takeaways

A few high-level takeaways.

Perhaps the first and most important one is to know when to use an autonomous multi-agent approach. I typically offer a complex-task perspective: does the task benefit from planning, can it be decomposed into components that benefit from specialization, does it require processing extensive context that can be siloed to specific agents, and is the environment dynamic (i.e., do we need to constantly sample to understand the impact of actions)?

Takeaway summary:

Know when to use multi-agent (most tasks don't need it)

Build eval-driven (define success metrics first)

Design human-centered (capability discovery, observability, interruptibility, cost-awareness)

Start simple (baseline → tools → agents → polish)

Most of the material here is adapted from Chapter 3 of my upcoming book on building multi-agent systems.

Acknowledgement: The ideas here have benefited from conversations with fellow contributors to AutoGen at Microsoft and the broader OSS community.