10 Ways to Critically Evaluate and Select the Right Multi-Agent Framework

#36 | What makes a multi-agent framework good or useful?

In a world crowded with dozens of multi-agent frameworks, a critical question for engineers, consultants or teams looking to build agentic AI applications is:

"What framework should I use?"

Other versions of essentially the same question include "Should I use LangGraph vs CrewAI vs AutoGen vs OpenAI agents vs …?" and "Which agentic framework is the best?"

Overall, I find that static, direct comparisons have limited value: all frameworks are constantly evolving (and even converging on similar APIs).

More importantly, I believe it's more valuable to offer guidance on how to think about comparing frameworks, so you can choose one that best supports your specific business goals.

Why am I Qualified to Write/Reflect on This?

I am a core contributor to AutoGen, a leading framework for multi-agent applications, where I've helped design aspects of the library, especially in the v0.4 release. Through this work, I've had the opportunity to discuss use cases with hundreds of developers, ranging from hobbyists to engineers working on serious production implementations. This background inevitably shapes (perhaps biases?) the perspectives I've shared below.

Additionally, my academic background in HCI (human-computer interaction), with its focus on user behavior, psychology, and applying these insights to build better interfaces, informs my approach. You'll likely notice this perspective in my reflections on developer experience throughout this post.

In this article, my main argument is that the right way to evaluate frameworks is by considering what they should help you accomplish. Rather than getting lost in feature-by-feature comparisons that quickly become outdated, let's focus on the fundamental capabilities that make a multi-agent framework valuable.

Below are 10 things a multi-agent framework should do well (and reasons you should consider adopting one).

For each dimension, I offer concrete evaluation questions you can apply to a framework and, where possible, code samples with documentation links that show a good implementation.

TL;DR: here are 10 dimensions to help you evaluate and select the right multi-agent framework:

Intuitive developer experience with both high-level APIs and low-level controls

Async-first design supporting non-blocking operations and streaming

Event-driven architecture for scalable agent communication

Seamless state management for agent persistence across sessions

Declarative agent specifications for serialization and sharing

Integrated debugging and evaluation tools for rapid iteration

Support for diverse agentic collaboration patterns

Enterprise-grade observability with built-in monitoring

Streamlined production deployment capabilities

Active community with strong documentation and growing ecosystem

I will reference AutoGen across this post. If you are new to the framework, I wrote a friendly introduction covering how it works.

1. Developer Experience

Low floors, high ceilings

A good framework should be approachable but also flexible. You should be able to express core ideas very fast, while maintaining the flexibility to implement highly specialized use cases. Think of frameworks like TensorFlow, where the Keras API provides a simplified Sequential API, a Functional API, and a low-level core API for custom layers and models.

AutoGen excels here with its low-level Core API and higher-level AgentChat API.

What does an approachable interface look like? It should let you intuitively express the core components of an agent - LLM, tools, memory, and how multiple agents interact as teams toward a goal/task (flow control, task management).

In my opinion, AutoGen is a leader here. An agent can be defined with clean, expressive code in the high-level AgentChat API:

...

agent = AssistantAgent("assistant", model_client=model_client, tools=[calculator])

...

The flow of control across agents can be defined with high-level team presets that orchestrate how agents collaborate.

team = RoundRobinGroupChat([agent1, agent2], termination_condition=termination)

A byproduct of the high-level API's simplicity is that you can replicate complex behaviors through composition with minimal code. For example, you can replicate the OpenAI Operator agent in 40 lines of code.

Additionally, AutoGen provides low-level control where an agent is any entity that can receive and publish messages. This implements the actor model, allowing you to compose simple message passing into complex distributed multi-agent behaviors.

Does it provide a high-level Agent abstraction with the ability to attach any LLM/model, tools, and memory components?

Does it provide a low-level API for custom agents and complex orchestration behaviour?

2. Asynchronous API

Many times your agent will explore tasks that are blocking (e.g., file I/O), take many steps, or are long-lived. Having an async design creates space to do things like stream intermediate updates, yield resources, and integrate with other modern tech stacks.

It's possible to make an async framework behave synchronously. Going the other direction is much harder.
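As a minimal sketch of the easy direction, an async entry point can be wrapped for synchronous callers with the standard library's `asyncio.run`. The names `run_agent` and `run_agent_sync` are hypothetical and stand in for whatever async call a framework exposes; they are not part of any library.

```python
import asyncio


async def run_agent(task: str) -> str:
    """Hypothetical async agent call; the sleep stands in for model or tool I/O."""
    await asyncio.sleep(0.01)
    return f"done: {task}"


def run_agent_sync(task: str) -> str:
    """Blocking wrapper for callers that are not async (for example, a plain script)."""
    # asyncio.run creates an event loop, runs the coroutine to completion,
    # and closes the loop. It raises RuntimeError if a loop is already running.
    return asyncio.run(run_agent(task))


if __name__ == "__main__":
    print(run_agent_sync("summarize report"))
```

The reverse is harder because a blocking call inside a running event loop stalls every other task on that loop, so a sync-only library typically has to be pushed onto threads or rewritten.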

AutoGen is async-first by design (the original v0.2 version was not, which led to a poor developer experience). This makes building UI applications straightforward with easy integrations for tools like Chainlit and Streamlit.

The async design enables:

Streaming partial results while agents are working

Handling long-running tasks without blocking

Supporting cancellation and human-in-the-loop feedback

Efficiently managing multiple simultaneous agent teams

Seamlessly integrating with modern async web frameworks

This approach aligns with modern architecture principles, making AutoGen well-suited for production environments where responsiveness and scalability matter.

result = await team.run(task="Write a short poem about the fall season.")

print(result)
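To make the streaming and concurrency benefits concrete, here is a small sketch in plain `asyncio`. It assumes a hypothetical `stream_steps` async generator that yields one update per intermediate step; it illustrates the pattern only and is not how any particular framework implements streaming.

```python
import asyncio
from typing import AsyncIterator, List, Optional


async def stream_steps(task: str, steps: int = 3) -> AsyncIterator[str]:
    """Hypothetical agent run that yields an update after each intermediate step."""
    for i in range(1, steps + 1):
        await asyncio.sleep(0.01)  # stands in for a model call or tool call
        yield f"step {i}/{steps} for {task!r}"


async def collect(task: str, limit: Optional[int] = None) -> List[str]:
    """Consume updates as they arrive; stop early once `limit` updates were seen."""
    updates: List[str] = []
    stream = stream_steps(task)
    try:
        async for update in stream:
            updates.append(update)  # a UI could render this partial result
            if limit is not None and len(updates) >= limit:
                break  # early stop, e.g. the user pressed "stop"
    finally:
        await stream.aclose()  # release the generator even on early exit
    return updates


async def main() -> List[List[str]]:
    # Two runs make progress concurrently on one event loop.
    return await asyncio.gather(collect("poem"), collect("summary", limit=1))


if __name__ == "__main__":
    for run in asyncio.run(main()):
        print(run)
```

Because each step awaits instead of blocking, the caller sees partial results immediately and can stop a run without waiting for it to finish.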

Is the framework async-first?

3. Event-Driven Communication (Distributed Agents, Runtime)

A robust multi-agent framework should separate messaging patterns from deployment models, so that the same agents can run in a single process at first and across multiple machines as the application scales.

AutoGen achieves this through two key components:

First, an event-driven messaging architecture based on the actor model, where agents communicate solely through message passing. This approach enables agents to:

Operate independently with encapsulated state

Communicate asynchronously

Scale horizontally as needed

Isolate failures to individual components

# see docs

response = await agent.on_messages(

[TextMessage(content="Find information on AutoGen", source="user")],

cancellation_token=CancellationToken(),

)

Second, a runtime abstraction layer that accommodates different deployment scenarios:

SingleThreadedAgentRuntime (standalone runtime) - suitable for single-process applications where all agents are implemented in the same programming language and run in the same process.

Distributed runtime - suitable for multi-process applications where agents may be implemented in different programming languages and run on different machines.

This separation means developers can start with a local implementation and later scale to distributed systems without changing application code. The architecture supports:

Independent scaling of resource-intensive agents

Cross-organizational deployment with security boundaries

Hardware isolation for enhanced security

Cross-language agent implementations

This flexibility is particularly valuable for enterprise deployments where security, scalability, and organizational boundaries are critical considerations.
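The separation between messaging and deployment can be sketched with the standard library alone. Everything below (`Message`, `Runtime`, `LocalRuntime`, `Researcher`, `Writer`) is a hypothetical illustration of the idea, not AutoGen's Core API: agents only receive and send messages, and they depend on a small `Runtime` interface rather than on how delivery happens.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Dict, List, Protocol


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


Handler = Callable[[Message, "Runtime"], Awaitable[None]]


class Runtime(Protocol):
    """What agents depend on; a distributed runtime could offer the same interface."""

    def register(self, name: str, handler: Handler) -> None: ...

    async def send(self, message: Message) -> None: ...


class LocalRuntime:
    """In-process runtime: one queue, delivery in arrival order."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self._queue: "asyncio.Queue[Message]" = asyncio.Queue()

    def register(self, name: str, handler: Handler) -> None:
        self._handlers[name] = handler

    async def send(self, message: Message) -> None:
        await self._queue.put(message)

    async def run_until_idle(self) -> None:
        while not self._queue.empty():
            message = await self._queue.get()
            await self._handlers[message.recipient](message, self)


class Researcher:
    async def on_message(self, message: Message, runtime: Runtime) -> None:
        notes = f"notes on {message.content}"
        await runtime.send(Message("researcher", "writer", notes))


class Writer:
    def __init__(self) -> None:
        self.drafts: List[str] = []  # state is private to this agent

    async def on_message(self, message: Message, runtime: Runtime) -> None:
        self.drafts.append(f"draft from {message.content}")


async def main() -> List[str]:
    runtime = LocalRuntime()
    writer = Writer()
    runtime.register("researcher", Researcher().on_message)
    runtime.register("writer", writer.on_message)
    await runtime.send(Message("user", "researcher", "AutoGen"))
    await runtime.run_until_idle()
    return writer.drafts


if __name__ == "__main__":
    print(asyncio.run(main()))
```

In this sketch, swapping `LocalRuntime` for a networked implementation of the same two methods would leave `Researcher` and `Writer` unchanged, which is the property the section describes.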

Is the framework event-driven at its core?

Does it provide a runtime abstraction that supports both local and distributed deployments?

4. Save and Load State (Resume Agents)

Also known as Agent Checkpointing, this feature is essential for practical deployment. You want agents that you can easily spin down and spin up. Spin down = save state, spin up = load state.

AutoGen provides clean abstractions for saving and loading the state of agents and entire teams. This capability enables several key workflows:

Pausing long-running tasks and resuming them later

Maintaining conversation context across application restarts

Scaling agent resources up or down based on demand

Supporting web applications with stateless API endpoints

Creating snapshot points for testing or debugging

Enabling disaster recovery for mission-critical agent deployments

With AutoGen, checkpointing agent state is as straightforward as:

# save state, docs

agent_state = await agent.save_state()

team_state = await team.save_state()

# load state

await agent.load_state(agent_state)

await team.load_state(team_state)

The save_state() and load_state() methods work across individual agents and teams. The serialized state can be stored in files or databases according to your application needs.
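As a rough illustration of the spin down / spin up cycle, the sketch below persists state to a JSON file and restores it into a fresh object. `NoteTakingAgent` is a hypothetical stand-in written for this example, and its methods are synchronous for brevity; the only assumption carried over from the text is that state is exported as plain, serializable data.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict, List


class NoteTakingAgent:
    """Hypothetical agent whose only state is its message history."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.history: List[str] = []

    def handle(self, text: str) -> str:
        self.history.append(text)
        return f"{self.name} has seen {len(self.history)} message(s)"

    def save_state(self) -> Dict[str, Any]:
        # Plain JSON-compatible data, so a file or a database row can hold it.
        return {"version": 1, "history": list(self.history)}

    def load_state(self, state: Dict[str, Any]) -> None:
        if state.get("version") != 1:
            raise ValueError("unsupported state version")
        self.history = list(state["history"])


def checkpoint_roundtrip(path: Path) -> str:
    first = NoteTakingAgent("assistant")
    first.handle("hello")
    path.write_text(json.dumps(first.save_state()))  # spin down

    second = NoteTakingAgent("assistant")  # e.g. a fresh process after a restart
    second.load_state(json.loads(path.read_text()))  # spin up
    return second.handle("are you still there?")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(checkpoint_roundtrip(Path(tmp) / "agent_state.json"))
```

Including a version field in the saved state is a design choice of this sketch; it lets a later release reject or migrate checkpoints written by an older one.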

Can you checkpoint and resume an agent or an entire workflow (consisting of multiple agents)?

5. Declarative Agents (Specifications)

Developers should be able to represent agents as declarative specifications that can be used to instantiate, serialize, version and share them. All good frameworks should support this functionality.

AutoGen's component specification system allows all core components—agents, teams, tools, and models—to be represented as serializable JSON.

team_config = team.dump_component() # serialize

new_team = RoundRobinGroupChat.load_component(team_config) # instantiate object

This enables:

Version control for agent configurations

Sharing agent designs across projects

Simplified deployment across environments

Integration with visual builders like AutoGen Studio
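The round trip between runtime objects and a declarative spec can be sketched with dataclasses and JSON. `AgentSpec`, `TeamSpec`, `dump_team`, and `load_team` are hypothetical names for this example, and the JSON layout is an assumption made here rather than AutoGen's component schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class AgentSpec:
    name: str
    system_message: str
    tools: List[str] = field(default_factory=list)


@dataclass
class TeamSpec:
    agents: List[AgentSpec]
    max_turns: int = 4


def dump_team(team: TeamSpec) -> str:
    """Serialize a team to JSON text that can be versioned or shared."""
    spec = {"type": "round_robin", "version": 1, "config": asdict(team)}
    return json.dumps(spec, indent=2)


def load_team(text: str) -> TeamSpec:
    """Rebuild the runtime object from its declarative form."""
    spec = json.loads(text)
    if spec["type"] != "round_robin" or spec["version"] != 1:
        raise ValueError("unknown component spec")
    config = spec["config"]
    agents = [AgentSpec(**agent) for agent in config["agents"]]
    return TeamSpec(agents=agents, max_turns=config["max_turns"])


if __name__ == "__main__":
    team = TeamSpec(
        agents=[
            AgentSpec("writer", "Write clearly.", ["search"]),
            AgentSpec("critic", "Give feedback."),
        ],
        max_turns=2,
    )
    text = dump_team(team)
    print(text)
    print(load_team(text) == team)
```

Because the spec is plain text, it can be diffed in version control and generated or edited by other tools, which is what makes the listed workflows possible.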

In my 2025 agent predictions, I suggest this approach will likely enable automated multi-agent systems where task-specific specifications are generated (possibly guided by RL) that outperform manually defined agent teams.

Can agents be serialized to a declarative format like JSON, and can runtime objects be reconstructed from that format?

6. Debugging/Prototyping/Evaluation Tools

Tools for rapid prototyping and feedback are essential for developers. Autonomous multi-agent systems have exponentially complex configurations, and most software engineers are unfamiliar with this level of complexity. Evaluating these systems requires creativity—designing baselines, comparing configurations, conducting experiments, and interpreting results.

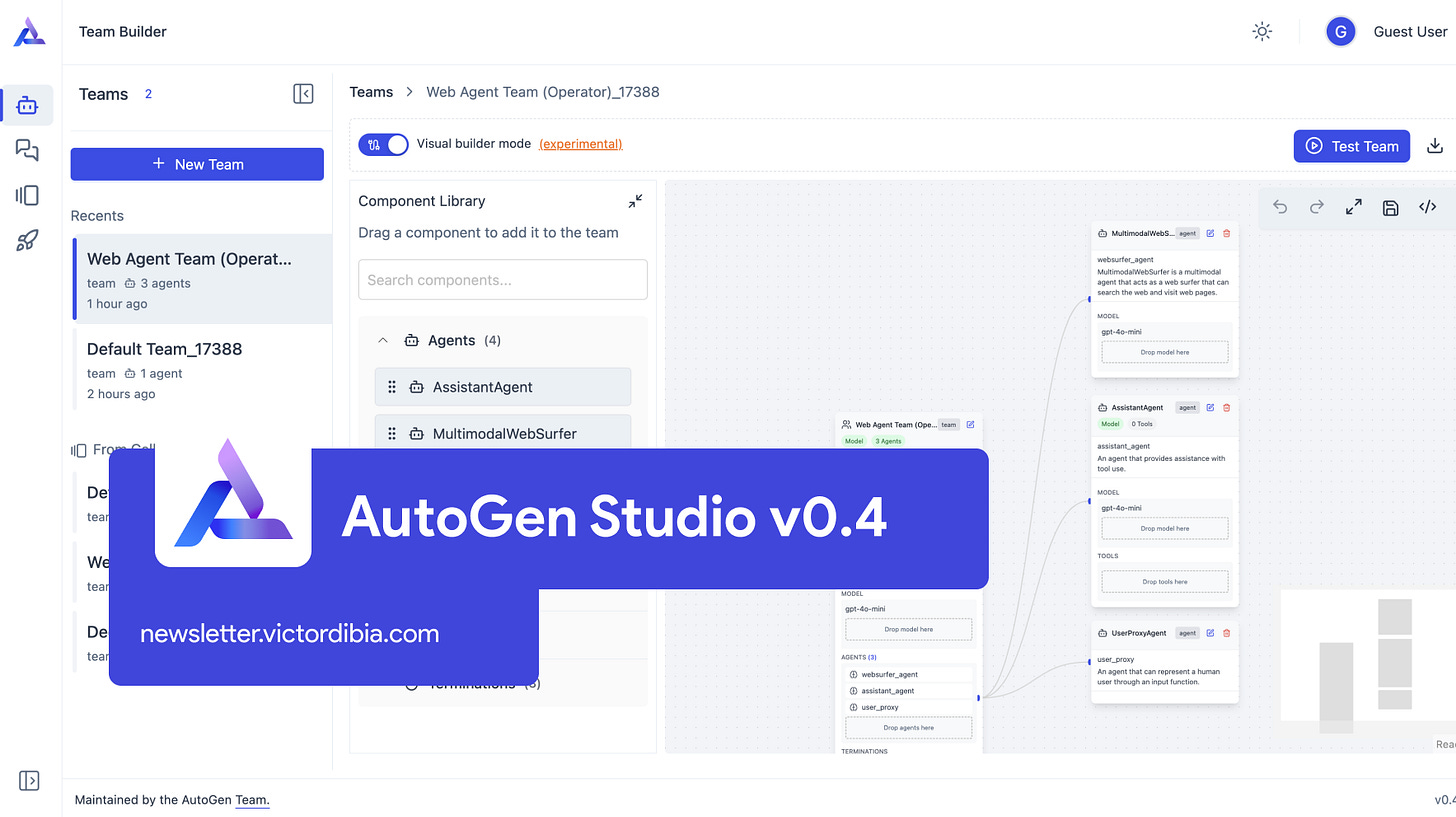

AutoGen Studio provides a visual team builder where you can drag and drop components from a library to create agent teams. You can test these teams directly in the interface, viewing the real-time flow of messages between agents, and monitor metrics like token usage and execution time.

autogenstudio ui

Key capabilities include:

Visualizing message flows between agents

Tracking tool usage and performance metrics

Comparing different team configurations

Testing systems without writing code

Streaming agent "inner monologue" during execution

Exporting tested configurations to production code

These tools reduce the time from concept to working system, helping developers iterate quickly on agent designs while maintaining visibility into the complex interactions occurring during execution.
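For offline comparison of configurations, even a very small harness helps. The sketch below is a hypothetical example: a "team" is reduced to a function from task to answer, the two configurations are deterministic stand-ins rather than real agents, and exact-match accuracy is just one possible metric.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Team = Callable[[str], str]  # hypothetical: a configured team maps a task to an answer


@dataclass
class Result:
    config: str
    accuracy: float


def compare(configs: Dict[str, Team], cases: List[Tuple[str, str]]) -> List[Result]:
    """Run every configuration on the same cases and rank by exact-match accuracy."""
    results = []
    for name, team in configs.items():
        correct = sum(1 for task, expected in cases if team(task).strip() == expected)
        results.append(Result(name, correct / len(cases)))
    return sorted(results, key=lambda r: r.accuracy, reverse=True)


def baseline(task: str) -> str:
    return "4"  # stand-in for a team that guesses


def with_calculator(task: str) -> str:
    # Stand-in for a team that delegates arithmetic to a tool.
    return str(sum(int(part) for part in task.split("+")))


if __name__ == "__main__":
    cases = [("2+2", "4"), ("3+5", "8")]
    ranking = compare({"baseline": baseline, "with_calculator": with_calculator}, cases)
    for result in ranking:
        print(result.config, result.accuracy)
```

Running all configurations on the same fixed cases is what makes the comparison meaningful; a real harness would also record cost and latency per run.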

Does the framework provide a UI to rapidly prototype and modify teams, reuse existing components, and inspect agent runtime behaviors?

Does the framework provide evaluation tools (interactive or offline/batch) to compare multiple multi-agent configurations?

7. Support for a Wide Range of Agentic/Multi-Agent Patterns

A good framework should provide ready-made presets that correspond to established (or emerging) patterns, making it easier to replicate proven ideas with minimal error.
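As an example of what such a preset encapsulates, here is a minimal round-robin loop with a termination condition. The `round_robin` function and the `writer` and `critic` stand-ins are hypothetical and synchronous for brevity; a real framework would typically make this async and pass richer message objects.

```python
from typing import Callable, List, Tuple

Agent = Callable[[str], str]  # takes the last message, returns a reply


def round_robin(
    agents: List[Tuple[str, Agent]],
    task: str,
    should_stop: Callable[[str], bool],
    max_turns: int = 10,
) -> List[Tuple[str, str]]:
    """Let agents take turns in a fixed order until a reply satisfies should_stop."""
    transcript = [("user", task)]
    for turn in range(max_turns):
        name, agent = agents[turn % len(agents)]
        reply = agent(transcript[-1][1])
        transcript.append((name, reply))
        if should_stop(reply):
            break
    return transcript


def writer(last_message: str) -> str:
    return f"draft: {last_message}"


def critic(last_message: str) -> str:
    return "APPROVE" if last_message.startswith("draft:") else "REVISE"


if __name__ == "__main__":
    team = [("writer", writer), ("critic", critic)]
    for speaker, text in round_robin(team, "a poem about fall", lambda r: r == "APPROVE"):
        print(f"{speaker}: {text}")
```

The turn order, the stop check, and the `max_turns` safety limit are the parts that are easy to get subtly wrong by hand, which is why a tested preset is valuable.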