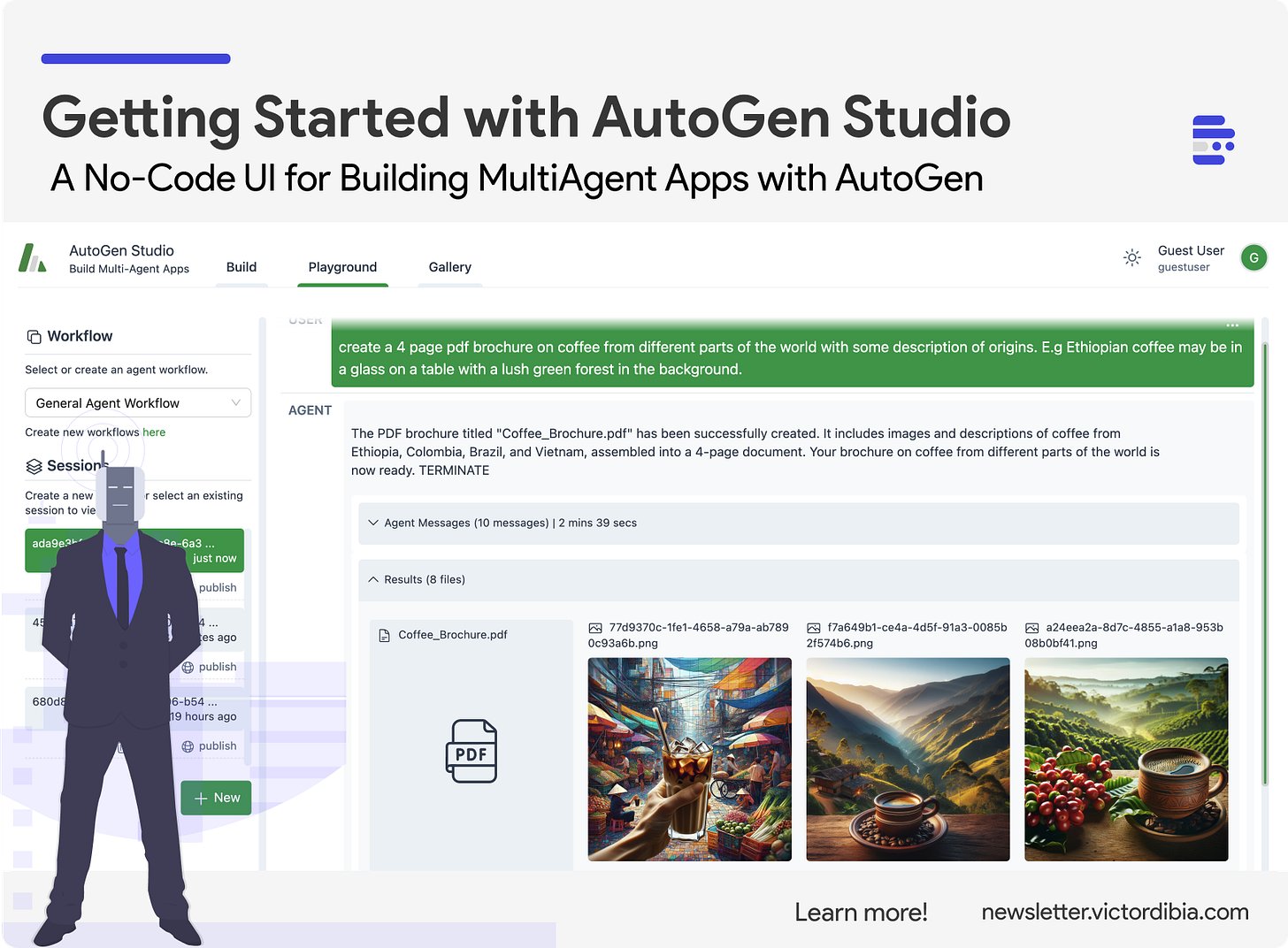

AutoGen Studio - A No-Code User Interface for Building and Debugging Multi-Agent Systems

Issue #18 | Build Multi-Agent Apps with AutoGen, Write Zero Code

AutoGen Studio is an open-source (OSS) no-code interface designed to enable rapid prototyping of multi-agent applications using AutoGen. It also serves as an example of how to explore building out the UI/UX of multi-agent applications.

Read the AutoGen Studio paper here.

It's short and has more details on the current state of the AutoGen Studio design.

This post aims to serve as a guide to the tool and to address frequently asked questions. (I am the lead developer of AutoGen Studio, so feedback is welcome!).

Note: This article will be updated regularly, as AutoGen Studio is under active development. As such, in its current form, it is not a production-ready tool. Some design goals mentioned may not be immediately reflected in the current implementation (this will be noted where applicable). The primary objective is to offer additional documentation for early adopters.

Note: AutoGen Studio only supports a subset of the features offered by the core AutoGen API.

This post references general terms such as multi-agent systems, models, agents and workflows, as well as AutoGen-specific abstractions like UserProxyAgent, AssistantAgent and GroupChat. For background, the reader is encouraged to read the following previous articles: An overview of multi-agent systems and Getting Started with AutoGen.

TL;DR

This post covers:

Installation - AutoGen Studio is OSS and full source code is here.

Entities and Concepts in AutoGen Studio (skills, models, agents, workflows)

System Design - UI Frontend, WebAPI, Workflow Manager

When to use AutoGen Studio vs. the AutoGen Python framework

Conclusions and FAQ

Getting Started - Installation

AutoGen Studio can be installed from PyPI. I typically suggest using a Python virtual environment such as miniconda (learn why here).

Install a virtual environment manager like miniconda. An install guide for Linux, Windows and macOS is here.

Activate your virtual environment, e.g., `conda activate autogenenv`.

Install AutoGen Studio:

```shell
pip install -U autogenstudio
```

Note that the source code for autogenstudio (frontend and backend files) is also available, in case you would like to make modifications.

Launching the UI

```shell
autogenstudio ui --port 8081 --appdir autogen
```

This launches the AutoGen Studio UI on port 8081 and creates a folder named `autogen` in the current directory to store files (e.g., generated images, the database). More on the UI is shown below:

Using AutoGen Studio

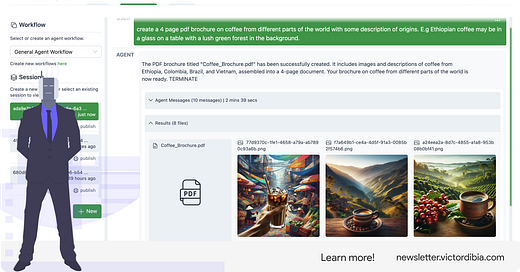

The expected user experience is as follows (shown in the short video above):

Build: Users begin by constructing their workflows. They may incorporate previously developed skills/models into agents within the workflow.

Playground: Users can start a new session, select a workflow, and engage in a "chat" with this agent workflow. It is important to note the significant differences between a traditional chat with a Large Language Model (LLM) and a chat with a group of agents. In the former, the response is typically a single formatted reply, while in the latter, it consists of a history of conversations among the agents.

Stateless API

Each request from the user interface (UI) is structured to be stateless, meaning that the backend API does not retain any in-memory state from one request to the next. Consequently, all parameters required to complete a task, including the history of previous messages and the configuration of the agent workflow, are either passed to the API (configuration) or retrieved from a database (e.g., history). Agents are dynamically created to handle the task at hand. For additional details on how AutoGen agents are generated from workflows, see the WorkflowManager section below.
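To make the stateless pattern concrete, here is a minimal sketch under stated assumptions: `DB`, `build_workflow` and `handle_request` are hypothetical names (not the studio's API), a plain dict stands in for the database, and a formatted string stands in for the actual agent run. The point it illustrates is that the handler rebuilds everything per call and only sees earlier turns through storage.

```python
from typing import Any, Dict, List

# Hypothetical store standing in for the studio database; the handler
# reads and writes it but keeps no state of its own between calls.
DB: Dict[str, List[Dict[str, str]]] = {}


def build_workflow(config: Dict[str, Any]) -> Dict[str, str]:
    """Stand-in for turning a workflow config into fresh agent objects."""
    return {"sender": config["sender"]["name"], "receiver": config["receiver"]["name"]}


def handle_request(config: Dict[str, Any], session_id: str, message: str) -> Dict[str, Any]:
    """Serve one UI request using only the config passed in and stored history."""
    history = list(DB.get(session_id, []))  # 1. history comes from storage
    workflow = build_workflow(config)       # 2. agents are rebuilt on every call
    reply = f"{workflow['receiver']} handled: {message}"  # placeholder for the agent run
    # 3. persist the new turn so the next, independent request can see it
    DB.setdefault(session_id, []).extend(
        [{"role": "user", "content": message}, {"role": "assistant", "content": reply}]
    )
    return {"reply": reply, "history_len": len(history)}
```

Calling `handle_request(cfg, "s1", "hello")` twice with the same session id shows the second call receiving two prior messages, even though nothing was kept in memory by the handler itself.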

Design Goals - Why No Code?

The AutoGen library provides a flexible interface for prototyping multi-agent applications. The building blocks and abstractions it provides (e.g., the ConversableAgent class) can be configured into a wide range of workflows for solving tasks in Python scripts or notebooks. AutoGen Studio is built to complement this experience in three main ways: supporting rapid prototyping via a UI, providing developer tooling, and enabling community sharing.

Who is AutoGen Studio For?

While it provides a UI, AutoGen Studio is still primarily a developer tool, built to enable rapid prototyping, debugging and sharing.

Rapid Prototyping

The goal here is to provide a playground experience where developers can rapidly test hypotheses, e.g., how will my agents behave if I use a given configuration (model, skills, agents, etc.)? This includes the ability to modify the parameters of their agents, run them against tasks and visualize the resulting artifacts (e.g., images, code, CSV and other generated file types).

Developer Tooling

AutoGen studio is also designed to gradually include tools that make it easy to visualize, observe and debug agent behaviors. Multi-agent systems are akin to complex systems, with behaviors that are inherently difficult to model due to the various interactions among their components or between the system and its environment. Given this complexity, it is useful to offer visual tools and interfaces that assist developers in making sense of how inputs to the system drive outcomes and results.

As a starting point, AutoGen Studio renders the "inner monologue" of agents, providing information on the number of messages exchanged and the cost associated with each task executed (# of turns, time and $ cost where available). Future tools will explore features like agent profiling (assigning tags to each action an agent takes) and visualizing metrics such as efficiency (if the agent action resulted in overall progress).

Community and Sharing

The AutoGen ecosystem is quite vibrant, with many contributions in the form of examples and code (PRs). AutoGen Studio also aims to foster sharing of these artifacts towards developing shared standards and best practices for addressing tasks with multiple agents. The current implementation supports downloading skill, agent and workflow configurations that can be shared and imported.

Entities and Concepts

AutoGen Studio adopts a declarative approach to specifying AutoGen agent workflows in a pure JSON DSL. This makes it possible to create these configurations in a UI (that maps to the JSON).
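As an illustration of what "a pure JSON DSL" means in practice, the sketch below loads a workflow spec from JSON text. The top-level keys follow the `AgentWorkFlowConfig` fields shown later in this post, but the nested values and the `load_workflow` helper are assumptions for illustration, not the exact schema the studio exports.

```python
import json

# Illustrative workflow spec; top-level keys mirror AgentWorkFlowConfig,
# nested values are assumptions.
WORKFLOW_JSON = """
{
  "name": "General Agent Workflow",
  "description": "A user proxy that delegates tasks to an assistant",
  "type": "twoagents",
  "summary_method": "last",
  "sender": {
    "type": "userproxy",
    "config": {"name": "userproxy", "human_input_mode": "NEVER"}
  },
  "receiver": {
    "type": "assistant",
    "config": {"name": "primary_assistant", "system_message": "You are a helpful assistant."}
  }
}
"""


def load_workflow(text: str) -> dict:
    """Parse a workflow spec and reject workflow types the studio does not support."""
    spec = json.loads(text)
    if spec["type"] not in ("twoagents", "groupchat"):
        raise ValueError(f"unsupported workflow type: {spec['type']}")
    return spec


spec = load_workflow(WORKFLOW_JSON)
print(spec["sender"]["config"]["name"], "->", spec["receiver"]["config"]["name"])
# prints: userproxy -> primary_assistant
```

Because the whole workflow is plain data, a UI form can edit the same structure field by field and save it back without any code generation.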

A workflow contains a few entities that are worth describing in detail.

Skills

A skill configuration is primarily a Python function that describes how to solve a task. In general, a good skill has a descriptive name (e.g., generate_images), extensive docstrings and good defaults (e.g., writing files to disk for persistence and reuse). Skills can be attached to agent specifications.
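Here is a small example of a skill written along those guidelines. `save_text_report` is a hypothetical skill chosen because it needs only the standard library; it shows the three properties mentioned above: a descriptive name, a docstring an agent can read, and defaults that persist output to disk.

```python
import os
from datetime import datetime


def save_text_report(content: str, filename: str = "", output_dir: str = "outputs") -> str:
    """Save text content to a file on disk and return the file path.

    Args:
        content: The text to write.
        filename: Optional file name; a timestamped name is generated if empty.
        output_dir: Directory to write into; created if it does not exist.

    Returns:
        The path of the written file, so later code (or agents) can reuse it.
    """
    os.makedirs(output_dir, exist_ok=True)
    if not filename:
        filename = f"report_{datetime.now():%Y%m%d_%H%M%S}.txt"
    path = os.path.join(output_dir, filename)
    with open(path, "w", encoding="utf-8") as f:
        f.write(content)
    return path
```

Returning the path rather than nothing makes it easy for an agent to mention or reuse the artifact in a later step of the conversation.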

Models

A model refers to the configuration of an LLM. Similar to skills, a model can be attached to an agent specification. AutoGen uses the openai library as the common interface for making calls to an LLM, and the model configuration implements the properties required to call an OpenAI-compliant API (model, api_key, base_url, api_version, etc.).
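The sketch below shows how a model entity could map onto the `llm_config` dictionary that the pyautogen 0.2 agents accept (a `config_list` of endpoint entries). The `ModelConfig` dataclass and `to_llm_config` helper are hypothetical, and the `llama3` model name and local URL in the test are placeholders.

```python
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class ModelConfig:
    """Hypothetical model entity: the fields an OpenAI-compatible client needs."""
    model: str
    api_key: Optional[str] = None
    base_url: Optional[str] = None
    api_type: Optional[str] = None
    api_version: Optional[str] = None


def to_llm_config(models: List[ModelConfig], temperature: float = 0.0) -> dict:
    """Build an AutoGen-style llm_config, dropping fields that were left unset."""
    config_list = [{k: v for k, v in asdict(m).items() if v is not None} for m in models]
    return {"config_list": config_list, "temperature": temperature}
```

Dropping unset fields keeps each entry minimal, so the same shape works for a hosted OpenAI model and for a local server that only needs `model` and `base_url`.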

Agents

An agent configuration declaratively specifies properties for an AutoGen agent (it mirrors most, but not all, of the members of the base AutoGen ConversableAgent class). Agents can be added to a workflow.
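A minimal sketch of such an agent configuration, assuming only a handful of ConversableAgent constructor arguments are mirrored: `name`, `system_message`, `human_input_mode`, `max_consecutive_auto_reply`, `llm_config` and `code_execution_config` are real ConversableAgent parameters, but this `AgentConfig` class, its defaults and the helper functions are illustrative rather than the studio's own definitions.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Dict, Literal, Union


@dataclass
class AgentConfig:
    """Hypothetical subset of ConversableAgent arguments, all JSON-serializable."""
    name: str
    system_message: str = "You are a helpful AI assistant."
    human_input_mode: Literal["ALWAYS", "NEVER", "TERMINATE"] = "NEVER"
    max_consecutive_auto_reply: int = 10
    llm_config: Union[Dict[str, Any], bool] = False
    code_execution_config: Union[Dict[str, Any], bool] = False


def dump_agent(cfg: AgentConfig) -> str:
    """Serialize the config to the JSON text the UI stores and exchanges."""
    return json.dumps(asdict(cfg))


def load_agent(text: str) -> AgentConfig:
    """Rebuild the config from JSON text."""
    return AgentConfig(**json.loads(text))
```

Restricting the fields to JSON-friendly values is what allows the configuration to round-trip through the UI and be shared as a file.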

Workflows

An agent workflow is a specification of a set of agents (a team of agents) that can work together to accomplish a task. AutoGen Studio structures a workflow with a sender and receiver abstraction, where the sender is simply the agent that calls `initiate_chat` with the receiver as the target agent. The sender and receiver are ConversableAgents or their derivations (currently, only the UserProxyAgent, AssistantAgent and GroupChat types are supported).
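To illustrate the sender/receiver abstraction without requiring an LLM, the sketch below uses hypothetical stub classes (`StubAgent`, `StubGroupChatManager`) in place of real AutoGen agents. It only shows the shape of the idea: the sender always calls `initiate_chat` on a single receiver, and for a group chat workflow that receiver fans the message out to the members. Real AutoGen group chats also select speakers and continue the conversation, which the stubs omit.

```python
from typing import Dict, List


class StubAgent:
    """Hypothetical stand-in for a ConversableAgent; it only records messages."""

    def __init__(self, name: str):
        self.name = name
        self.inbox: List[str] = []

    def receive(self, message: str, sender: "StubAgent") -> None:
        self.inbox.append(f"{sender.name}: {message}")

    def initiate_chat(self, recipient: "StubAgent", message: str) -> None:
        # A real recipient would reply and the exchange would continue;
        # the stub only delivers the opening message.
        recipient.receive(message, self)


class StubGroupChatManager(StubAgent):
    """Stand-in for a group chat receiver that forwards messages to its members."""

    def __init__(self, name: str, members: List[StubAgent]):
        super().__init__(name)
        self.members = members

    def receive(self, message: str, sender: StubAgent) -> None:
        super().receive(message, sender)
        for member in self.members:
            member.receive(message, sender)


def build_receiver(spec: Dict) -> StubAgent:
    """Pick the receiver type from the workflow type ("twoagents" or "groupchat")."""
    if spec["type"] == "twoagents":
        return StubAgent(spec["receiver"]["name"])
    members = [StubAgent(n) for n in spec["receiver"]["agents"]]
    return StubGroupChatManager(spec["receiver"]["name"], members)
```

From the sender's point of view, both workflow types look the same, which is why a single `initiate_chat` call can drive either.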

System Design

The AutoGen Studio implementation can be divided into four high-level components: the frontend UI, the backend web API, a workflow manager and a database manager.

User Interface

The user interface for AutoGen Studio has three high-level sections: build, playground and gallery. It is built with React (Gatsby).

Build: The build section offers an interface to specify properties of agent workflows that can be used to address tasks. This is broken down across the four high-level primitives discussed above: skills, models, agents and workflows.

Playground: The playground section provides an interface to “chat” with an agent workflow. Each chat is organized into a session with history maintained per session.

A session can be “published” to a gallery.

Gallery: The gallery (currently implemented as a local gallery) provides a view of the chat history associated with a published session.

Web API (Backend API)

AutoGen Studio implements a backend API using FastAPI. It mainly provides a set of endpoints that support the frontend UI, including database CRUD operations for the entities listed above, sessions and gallery items.

Workflow Manager

The Workflow Manager provides an API to execute a task against a specified workflow configuration. It accomplishes this by converting the workflow specification into actual AutoGen agent classes and then invoking the `initiate_chat` method from the designated `sender` to the `receiver`.

The Workflow Manager accepts two critical parameters: `config`, which is the Workflow Configuration, and `history`, a list of messages that represent prior communications with the agent. It features a public `init` method that is executed upon the creation of a Workflow Manager object and a `run` method that can be invoked to execute a task within the workflow.

The `init` method operates as follows:

Load Agents: The sender and receiver are loaded as AutoGen agents.

Load Skills: As agents are loaded, if skills are attached to them, the following steps are taken:

Concatenate all skills and write them to a `skills.py` file in the agent's working directory.

Create a skill prompt that includes all skills, concatenated, with instructions to the agent as follows: "The following functions are available in a file named `skills.py`. These functions can be used while generating code by importing the function name from `skills.py`."

Add the skill prompt to the agent's system message.

Load History: If a history object is provided, the Workflow Manager attempts to repopulate the history for both the sender and receiver by triggering silent send events on these agents. Messages with the role 'user' are replayed from the sender by calling `sender.send(message, receiver, request_reply=False)`, and messages with the role 'assistant' are replayed from the receiver by calling `receiver.send(message, sender, request_reply=False)`. Because `request_reply=False`, neither agent generates a new reply while the history is being restored.
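The history replay step can be sketched as follows. `StubAgent` is a hypothetical stand-in that mimics the `send(message, recipient, request_reply)` shape of an AutoGen agent and counts how many replies it would have generated; `replay_history` is likewise an illustrative name, not the WorkflowManager's actual method.

```python
from typing import Dict, List, Optional, Tuple


class StubAgent:
    """Hypothetical stand-in exposing a send(message, recipient, request_reply) shape."""

    def __init__(self, name: str):
        self.name = name
        self.messages: List[Tuple[str, str]] = []  # (speaker, content) seen by this agent
        self.replies_generated = 0

    def send(self, message: str, recipient: "StubAgent", request_reply: Optional[bool] = None) -> None:
        self.messages.append((self.name, message))
        recipient.receive(message, self, request_reply)

    def receive(self, message: str, sender: "StubAgent", request_reply: Optional[bool] = None) -> None:
        self.messages.append((sender.name, message))
        if request_reply is not False:
            self.replies_generated += 1  # a real agent would call the LLM here


def replay_history(history: List[Dict[str, str]], sender: StubAgent, receiver: StubAgent) -> None:
    """Restore prior turns silently: user turns from the sender, the rest from the receiver."""
    for msg in history:
        if msg["role"] == "user":
            sender.send(msg["content"], receiver, request_reply=False)
        else:
            receiver.send(msg["content"], sender, request_reply=False)
```

After replay, both agents hold the same ordered transcript, but no new replies have been triggered, so the next `run` continues the conversation rather than re-answering old messages.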

Since skills are incorporated into the agent’s system message, the agent is made aware of these skills and can utilize them by importing from `skills.py`.
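The skill-loading steps (concatenate, write `skills.py`, extend the system message) can be sketched as a single function. `attach_skills` is a hypothetical name and the prompt wording is adapted from the description above; the studio's own implementation may format the prompt differently.

```python
import os
from typing import List

# Prompt wording adapted from the description above.
SKILL_PROMPT_HEADER = (
    "The following functions are available in a file named skills.py. "
    "These functions can be used while generating code by importing the "
    "function name from skills.py."
)


def attach_skills(system_message: str, skills: List[str], work_dir: str) -> str:
    """Write skills to work_dir/skills.py and return the augmented system message.

    `skills` holds the source code of each skill function as a string.
    """
    if not skills:
        return system_message
    source = "\n\n".join(s.strip() for s in skills)  # concatenate all skills
    os.makedirs(work_dir, exist_ok=True)
    with open(os.path.join(work_dir, "skills.py"), "w", encoding="utf-8") as f:
        f.write(source + "\n")
    # The agent sees both the instructions and the skill source in its system message.
    return f"{system_message}\n\n{SKILL_PROMPT_HEADER}\n\n{source}"
```

Writing the file into the agent's working directory matters because that is where generated code executes, so `from skills import ...` resolves there.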

Finally, the `run` method takes a `message` argument and initiates a conversation between agents by calling `sender.initiate_chat(receiver, message ...)`.

Whenever the user enters text into the UI (e.g., plot the stock price of NVIDIA today), the associated workflow configuration is used to instantiate a WorkflowManager instance, the `run` method is called and the result is returned to the UI.
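The overall init/run split can be summarized with a minimal sketch. `MiniWorkflowManager` and `EchoAgent` are hypothetical stand-ins (the echo replaces the LLM-backed conversation), meant only to show that agents are built when the manager is constructed and that each UI message results in one `run` call on a fresh instance.

```python
from typing import Dict, List, Optional


class EchoAgent:
    """Hypothetical stand-in for an AutoGen agent that replies by echoing."""

    def __init__(self, name: str):
        self.name = name
        self.transcript: List[Dict[str, str]] = []

    def initiate_chat(self, recipient: "EchoAgent", message: str) -> None:
        self.transcript.append({"name": self.name, "content": message})
        reply = f"echo: {message}"  # a real receiver would call an LLM and/or run code
        self.transcript.append({"name": recipient.name, "content": reply})


class MiniWorkflowManager:
    """Sketch of the init/run split: build agents in __init__, chat in run()."""

    def __init__(self, config: Dict, history: Optional[List[Dict]] = None):
        self.sender = EchoAgent(config["sender"]["name"])
        self.receiver = EchoAgent(config["receiver"]["name"])
        self.history = history or []  # the real manager replays this into the agents

    def run(self, message: str) -> List[Dict[str, str]]:
        self.sender.initiate_chat(self.receiver, message)
        return self.sender.transcript
```

For example, `MiniWorkflowManager(config).run("plot the stock price of NVIDIA today")` returns a two-message transcript whose last entry is the receiver's reply, which is what the UI would then display.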

Database

All of the entities in the build section of the UI are persisted to a database (SQLite). The database is also used to implement sessions: each session is made up of a list of messages sharing a session id. To resume a session in the UI, we retrieve all messages with that session id and pass them as history to the `run` method.

Message history is updated for each session when:

The user sends a message from the UI.

An agent workflow returns a “final response” to the user message. Note that the final response is determined by the `summary_method` field of the workflow configuration. Supported options include:

last: The default is `last` which saves the last message in the agent conversation. The drawback of `last` is that if the last message does not contain the actual answer, then that information is not committed to history.

llm: This uses an LLM to summarize the agent conversation before it is added to history (recommended).

none: No final message is created.
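A sketch of session persistence plus the three summary options, using the standard library `sqlite3` module with an in-memory database. The table layout and function names are assumptions for illustration, and the `summarize` callable stands in for the LLM call that the `llm` option would make.

```python
import sqlite3
from typing import Callable, Dict, List, Optional


def open_db() -> sqlite3.Connection:
    """Create an in-memory messages table keyed by session id (illustrative schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE messages (id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "session_id TEXT NOT NULL, role TEXT NOT NULL, content TEXT NOT NULL)"
    )
    return conn


def save_message(conn: sqlite3.Connection, session_id: str, role: str, content: str) -> None:
    conn.execute(
        "INSERT INTO messages (session_id, role, content) VALUES (?, ?, ?)",
        (session_id, role, content),
    )


def load_history(conn: sqlite3.Connection, session_id: str) -> List[Dict[str, str]]:
    rows = conn.execute(
        "SELECT role, content FROM messages WHERE session_id = ? ORDER BY id", (session_id,)
    ).fetchall()
    return [{"role": role, "content": content} for role, content in rows]


def final_response(agent_messages: List[str], summary_method: str = "last",
                   summarize: Optional[Callable[[List[str]], str]] = None) -> Optional[str]:
    """Pick what gets committed to history for one agent conversation."""
    if summary_method == "none":
        return None
    if summary_method == "llm":
        if summarize is None:
            raise ValueError("summary_method='llm' needs a summarizer")
        return summarize(agent_messages)
    return agent_messages[-1] if agent_messages else None  # "last" (default)
```

With a conversation such as `["Here is the code and the saved chart: nvda.png", "TERMINATE"]`, the `last` option commits only `"TERMINATE"`, which is exactly the drawback described above; the `llm` option can instead commit a summary that carries the actual answer.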

AutoGen Studio vs AutoGen - When to Use What?

AutoGen Studio builds firmly on top of AutoGen but also implements a limited set of abstractions, primarily to support rapid prototyping via a UI. The best place to understand these abstractions is the WorkflowManager class, which implements how agent configurations are run against user tasks in AutoGen Studio. As a developer, understanding these abstractions can help you decide whether to build with autogenstudio or write your own agent logic directly with AutoGen classes.

Important current limitations/differences of autogenstudio today include:

Support for only two-agent and GroupChat workflows. Other types of agents are not currently supported.

Support for only the serializable properties of the ConversableAgent class. Because agent configurations are serialized to JSON text (from the UI), only these types of fields are exposed. For fields that are objects or callables (e.g., is_termination_msg), autogenstudio uses a fixed “best effort” implementation (e.g., a fixed is_termination_msg logic). See WorkflowManager for more details. This also applies to custom behaviour such as registering custom reply functions, using hooks, etc.

Only model endpoints that are OpenAI-compliant are currently supported.
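The serialization limitation can be demonstrated directly: JSON cannot represent a Python callable, so a callable field has to be dropped on save and replaced with fixed logic on load. The functions below are hypothetical, and the "message ends with TERMINATE" rule is an assumed example of a best-effort termination check, not a quote of the studio's code.

```python
import json
from typing import Any, Dict


def is_termination_msg(message: Dict[str, Any]) -> bool:
    """Fixed, best-effort check (assumed rule: content ends with TERMINATE)."""
    content = message.get("content") or ""
    return content.rstrip().endswith("TERMINATE")


def to_json_config(agent_kwargs: Dict[str, Any]) -> str:
    """Drop callables, which JSON cannot represent, before serializing."""
    serializable = {k: v for k, v in agent_kwargs.items() if not callable(v)}
    return json.dumps(serializable)


def from_json_config(text: str) -> Dict[str, Any]:
    """Re-attach the fixed termination check when rebuilding agent kwargs."""
    kwargs = json.loads(text)
    kwargs["is_termination_msg"] = is_termination_msg
    return kwargs
```

Any custom callable a user might have written is lost in this round trip, which is why behaviours like custom reply functions or hooks require the AutoGen library directly.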

AutoGen Studio also introduces new abstractions to support its implementation. It introduces data models for existing AutoGen entities with additional fields (e.g., AgentFlowSpec) and introduces some new entities (e.g., AgentWorkFlowConfig):

AgentFlowSpec represents agents. It has a config field that mirrors serializable properties in a classic AutoGen agent class. It also has additional fields such as skills to enable the exact type of implementation used in the Studio.

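```python
from __future__ import annotations

from dataclasses import dataclass
from typing import List, Literal, Optional, Union

# AgentConfig and Skill are defined elsewhere in the studio's datamodel.


@dataclass
class AgentFlowSpec:
    """Data model to help flow load agents from config"""

    type: Literal["assistant", "userproxy"]
    config: AgentConfig
    id: Optional[str] = None
    timestamp: Optional[str] = None
    user_id: Optional[str] = None
    skills: Optional[Union[None, List[Skill]]] = None
```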

AgentWorkFlowConfig represents agent workflows and introduces concepts such as a sender and receiver (which are also agents).

```python
# GroupChatFlowSpec is defined elsewhere in the studio's datamodel.
@dataclass
class AgentWorkFlowConfig:
    """Data model for Flow Config for AutoGen"""

    name: str
    description: str
    sender: AgentFlowSpec
    receiver: Union[AgentFlowSpec, GroupChatFlowSpec]
    type: Literal["twoagents", "groupchat"] = "twoagents"
    id: Optional[str] = None
    user_id: Optional[str] = None
    timestamp: Optional[str] = None
    # How the agent message summary is generated. last: only the last message is used,
    # none: no summary, llm: use an LLM to generate a summary
    summary_method: Optional[Literal["last", "none", "llm"]] = "last"
```

Some of these limitations will likely be improved as the project progresses.

TL;DR

AutoGen Studio: Use AutoGen Studio if your application or prototype relies on demonstrating basic/simple capabilities in a UI (two agents conversing, or GroupChat)

AutoGen: Use AutoGen if your application requires complex behaviors, e.g., custom RAG pipelines, custom agents, custom orchestration and agent reply logic, etc.

Deploying AutoGen Studio

AutoGen Studio provides a Dockerfile that can be used to spin up a container that launches the latest autogenstudio build from PyPI on a specified port (using gunicorn). Dockerfile here.

You can utilize your favorite container management platform or orchestration tools, such as Docker, Kubernetes, Azure Web App, GCP Cloud Run or App Engine, Amazon ECS (Elastic Container Service), HuggingFace Spaces to deploy AutoGen Studio using the provided Dockerfile.

For example, deploying to Hugging Face Spaces requires port 7860, so a Dockerfile may look like the following:

```dockerfile
FROM python:3.10

WORKDIR /code

RUN pip install -U gunicorn autogenstudio==0.0.56

RUN useradd -m -u 1000 user
USER user
ENV HOME=/home/user \
    PATH=/home/user/.local/bin:$PATH \
    AUTOGENSTUDIO_APPDIR=/home/user/app

WORKDIR $HOME/app

COPY --chown=user . $HOME/app

CMD gunicorn -w $((2 * $(getconf _NPROCESSORS_ONLN) + 1)) --timeout 12600 -k uvicorn.workers.UvicornWorker autogenstudio.web.app:app --bind "0.0.0.0:7860"
```

Conclusion

AutoGen Studio is designed to provide a UI for prototyping multi-agent applications. While it is built using AutoGen, it also introduces additional abstractions that extend the base AutoGen implementation (e.g., the concept of skills and how they are made available to agents). In addition, it supports only a subset of core AutoGen capabilities.

As mentioned earlier, AutoGen Studio is under active development (roadmap here).

About the Author

If you found this useful, cite as:

```bibtex
@inproceedings{dibia2024studio,
  title={AutoGen Studio: A No-Code Developer Tool for Building and Debugging Multi-Agent Systems},
  author={Victor Dibia and Jingya Chen and Gagan Bansal and Suff Syed and Adam Fourney and Erkang (Eric) Zhu and Chi Wang and Saleema Amershi},
  year={2024},
  booktitle={Pre-Print}
}
```

Victor Dibia is a Research Scientist with interests at the intersection of Applied Machine Learning and Human Computer Interaction. Note: The views expressed here are my own opinions / view points and do not represent views of my employer. This article has also benefitted from the referenced materials and authors below.

References

AutoGen Studio Docs https://microsoft.github.io/autogen/docs/autogen-studio/getting-started
